Integration and interoperability mechanisms in the Platform

In many scenarios, the Platform acts as the organization’s central platform (for example, as a Smart City Platform), and a set of verticals, developments or applications must either upload information to it (for example, values, alarms or KPIs) or consume information that it manages (such as asset information).

The correct integration of the vertical services with the Platform is essential to ensure that the information that flows through the vertical is incorporated into the global information flow provided by the Platform, thus allowing the information to be exploited in a holistic manner.

The objective of this entry is to describe the integration mechanisms offered by the Platform through its technical components and its interoperability capabilities.

Platform Capabilities

Integration, whether unidirectional or bidirectional, relies on Onesait Platform’s out-of-the-box integration and interoperability capabilities, which allow it to be integrated with devices and IT systems in a simple and agile way. These capabilities include:

- Connectors: the Platform offers connectors for multiple protocols that allow sending, receiving and subscribing to the data it manages. These include REST APIs, an MQTT bus, a Kafka bus and WebSockets. The Platform also allows you to create connectors for the Digital Broker and deploy them as plugins, or to connect easily to existing buses and platforms through DataFlow.

- FIWARE NGSI-v2 Protocol Support: this allows interoperability with FIWARE platforms through the Orion Context Broker. We explain this in detail in this article on FIWARE NGSI-v2 compatibility in the Onesait Platform.

- Multilanguage client APIs: the Platform offers multilanguage APIs to facilitate the development of clients that need to communicate with it. These include APIs for Java, Kafka, Python, JavaScript, Node.js, Android and Node-RED. We explain them in detail in these Client APIs and Digital Broker guides.

- Open Source Platform: all components of the Platform are open source, including the client APIs and the components that support integration.

- Semantic approach: information is modeled in Entities, which make it possible to manage it independently of the protocol used to send the data. Regardless of the protocol, the information is managed in the same way. This facilitates not only the integration between the services and the Platform, but also the integration and global management of data between the different services. Within this approach, the Platform supports JSON-Schema and JSON-LD for linked data, including definitions according to schema.org.

- Digital Twins: a Digital Twin is a digital representation of a real-world entity or system. It does not replace the physical object or system it represents, but acts as a replica of it, allowing communication with the physical device (testing, monitoring, commands) without having to be attached to it. We explain this in detail in the article on the applicability and relevance of Digital Twins in the Smart Cities environment.
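To make the semantic approach more concrete, here is a minimal sketch in Python of an Entity described with a small JSON-Schema-style definition, and of a check that is applied in exactly the same way whether the message arrived over REST or over MQTT. The `Thermometer` entity, its fields and the `validate` and `ingest` helpers are hypothetical and only cover the `required` and `type` keywords; a real deployment would rely on a complete JSON-Schema validator.

```python
import json

# Hypothetical Entity definition, written in JSON-Schema style.
THERMOMETER_SCHEMA = {
    "type": "object",
    "required": ["deviceId", "temperature"],
    "properties": {
        "deviceId": {"type": "string"},
        "temperature": {"type": "number"},
    },
}

# Subset of JSON-Schema type names mapped to Python types.
_TYPES = {"string": str, "number": (int, float), "boolean": bool, "object": dict}


def validate(instance, schema):
    """Return a list of error messages; an empty list means the instance is valid."""
    if not isinstance(instance, dict):
        return ["instance must be a JSON object"]
    errors = []
    for name in schema.get("required", []):
        if name not in instance:
            errors.append(f"missing required field: {name}")
    for name, rule in schema.get("properties", {}).items():
        if name not in instance:
            continue
        value = instance[name]
        # bool is a subclass of int in Python, so reject it explicitly for numbers.
        is_bool_as_number = rule["type"] == "number" and isinstance(value, bool)
        if is_bool_as_number or not isinstance(value, _TYPES[rule["type"]]):
            errors.append(f"{name} must be of type {rule['type']}")
    return errors


def ingest(raw_payload, protocol):
    """Decode a payload from any protocol and validate it against the same Entity."""
    instance = json.loads(raw_payload)
    return {
        "protocol": protocol,
        "entity": instance,
        "errors": validate(instance, THERMOMETER_SCHEMA),
    }


# The same Entity rules apply to a REST body (str) and to an MQTT payload (bytes).
rest_result = ingest('{"deviceId": "t-01", "temperature": 21.5}', "REST")
mqtt_result = ingest(b'{"deviceId": "t-01", "temperature": "hot"}', "MQTT")
```

The protocol only appears as a label on the result: the decoding and validation path is shared, which is the point of modeling the data as an Entity.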

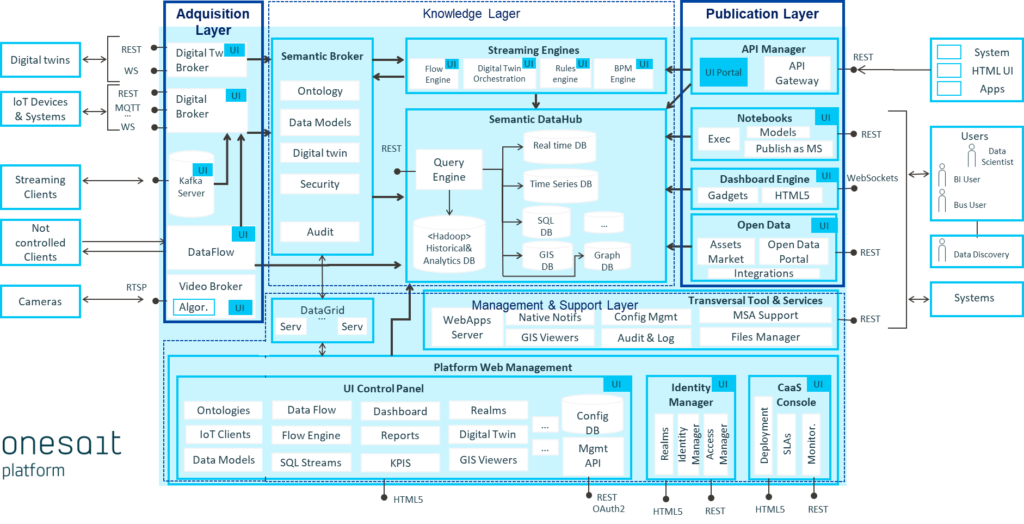

Platform modules that support it

The following image shows the Platform’s components, organized by layers. The Acquisition and Publication layers are highlighted; they contain the components used to communicate with the Platform (sending, querying and subscribing):

The described layers correspond to the Smart City Platform Layers Model of the UNE-178104 standard.

Acquisition layer

This layer offers the mechanisms to capture data from the collecting systems. It is also in charge of allowing the interconnection with other external systems that only consume data, and it abstracts the information from the collecting systems with a standard semantic approach.

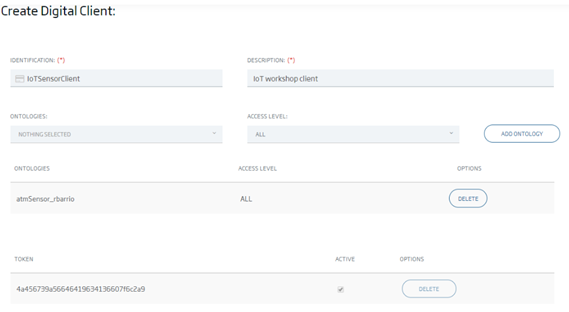

Digital Broker

It is the Platform’s Broker and the default data acquisition mechanism:

- It offers multiprotocol gateways (REST, MQTT, WebSockets, etc.).

- It offers two-way communication with the Platform’s clients.

- It offers multilanguage APIs (Java, JavaScript, Python, Node.js, Android, etc.) that allow each vertical to develop in its preferred language and on its preferred platform.

It is the mechanism with which systems and devices are typically integrated.
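As an illustration of what a client of a REST gateway might do, the following sketch builds an HTTP request that carries one entity instance as JSON. It uses only the Python standard library. The URL, the path layout, the `Authorization` value and the `build_insert_request` helper are all assumptions made for this example and are not the Digital Broker’s actual API; the Client APIs and Digital Broker guides describe the real endpoints.

```python
import json
import urllib.request

# Hypothetical values: replace them with the gateway URL and token of your deployment.
BROKER_URL = "https://platform.example.com/hypothetical-broker/rest"
SESSION_TOKEN = "example-session-token"


def build_insert_request(entity_name, instance):
    """Build (but do not send) an HTTP POST that carries one entity instance."""
    body = json.dumps(instance).encode("utf-8")
    return urllib.request.Request(
        url=f"{BROKER_URL}/entity/{entity_name}",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": SESSION_TOKEN,
        },
    )


request = build_insert_request("Thermometer", {"deviceId": "t-01", "temperature": 21.5})

# Sending is left commented out because the URL above is not a real endpoint:
# with urllib.request.urlopen(request, timeout=10) as response:
#     print(response.status)
```

Keeping request construction separate from sending makes the payload easy to inspect and test before pointing the client at a real gateway.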

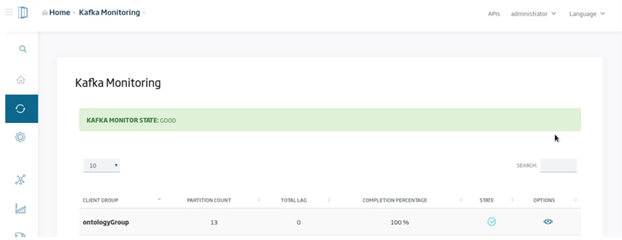

Kafka Broker

The Platform integrates a Kafka cluster that allows communication with systems that use this exchange protocol, generally because they handle a large volume of information and need low latency.

DataFlow

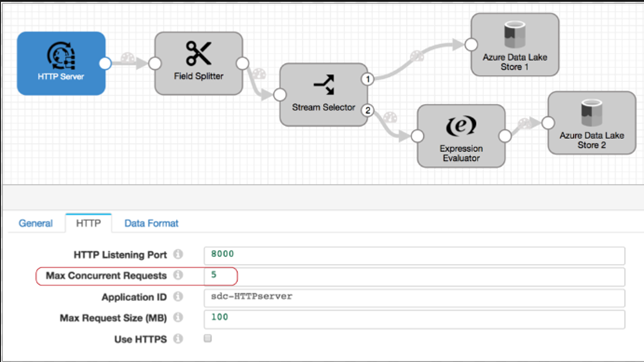

This component allows us to configure data flows from a web interface. These flows are made up of one source (which can be files, databases, TCP services, HTTP, queues, the Platform’s Digital Broker, etc.), one or more transformations (processors in Python, Groovy, JavaScript, etc.), and one or more destinations (the same options as for the source).

This is the mechanism to use when the Platform collects the information from the source system, rather than the source system sending it to the Platform, or when the system/device is not integrated with the Platform and dumps its information on an external bus. In the latter case, the data must then be converted on the Platform.
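The source, transformation and destination structure described above can be sketched in a few lines of Python. This is only a conceptual model of such a flow, not DataFlow’s configuration format or API: the function names, the CSV columns and the two in-memory destinations are hypothetical.

```python
import csv
import io
import json


def csv_source(text):
    """Source: yield one record (a dict) per CSV row."""
    yield from csv.DictReader(io.StringIO(text))


def to_celsius(records):
    """Transformation: rename fields and convert Fahrenheit readings to Celsius."""
    for record in records:
        fahrenheit = float(record["temp_f"])
        yield {
            "deviceId": record["device"],
            "temperature": round((fahrenheit - 32) * 5 / 9, 1),
        }


def drop_outliers(records, low=-50.0, high=60.0):
    """Transformation: discard readings outside a plausible range."""
    for record in records:
        if low <= record["temperature"] <= high:
            yield record


def run_pipeline(source, transformations, destinations):
    """Chain the transformations over the source and fan out to every destination."""
    stream = source
    for transform in transformations:
        stream = transform(stream)
    for record in stream:
        for destination in destinations:
            destination(record)


stored = []     # stands in for a database destination
published = []  # stands in for a message queue destination

run_pipeline(
    source=csv_source("device,temp_f\nt-01,70.7\nt-02,999\n"),
    transformations=[to_celsius, drop_outliers],
    destinations=[stored.append, lambda r: published.append(json.dumps(r))],
)
```

Each stage is a generator, so records are processed one at a time as they flow from the source to the destinations, and stages can be reordered or replaced without touching the others.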

Among its main characteristics, we find that:

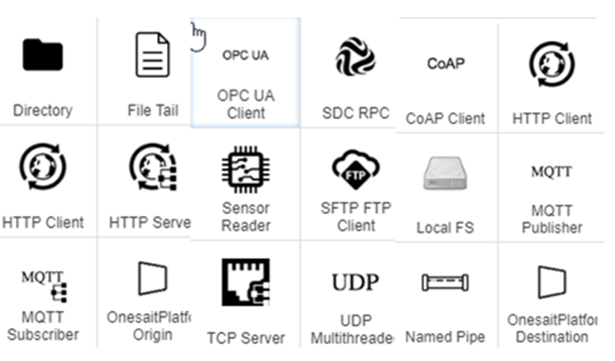

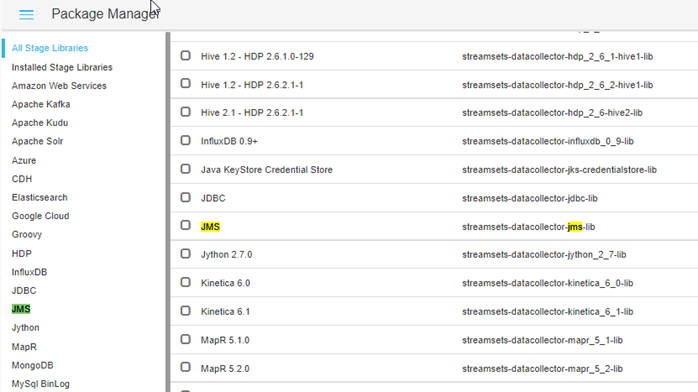

- It includes a large number of input and output connectors, such as connections to databases, Web services, Google Analytics, REST services, brokers, etc., which lets us decouple systems in a simple and integrated way through a single tool. The main DataFlow connectors include Big Data connectors for Hadoop and Spark, FTP, files, REST endpoints, JDBC, NoSQL databases, Kafka, Azure Cloud Services, AWS, Google, etc.

- It offers a large number of connectors for IoT scenarios such as OPC, CoAP, MQTT, etc.

- It allows an external system to communicate with the Platform through one of the supported protocols (MQTT, REST, Kafka, JMS, etc.); the Platform then orchestrates this data and either routes it to another system or incorporates it into the Platform.

- In addition, there is a palette of components that support more specific protocols, which the Platform administrator can enable as needed.

- All the information flowing through the component is monitored, audited and made available so that it can be exploited. In addition to this, the DataFlow component offers online traceability and monitoring:

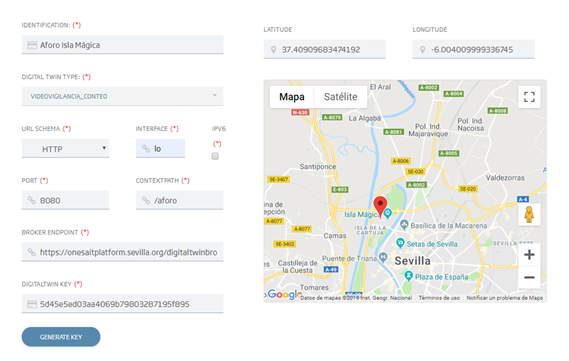

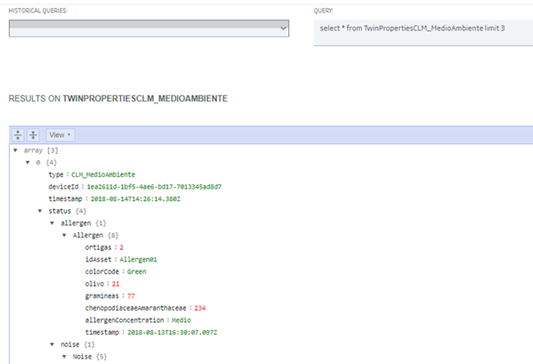

Digital Twin Broker

This broker allows communication between the Digital Twins and the Platform, and between several Digital Twins. It supports REST and WebSockets as protocols.

The Platform implements the W3C Web of Things standard, which defines how a Digital Twin must be modeled and the communication APIs it must provide. On that basis, it provides complete support to materialize these concepts in digital systems that can be connected to each other and that collaborate according to rules established visually by a municipal management operator.

To achieve this, the Platform provides the following capabilities:

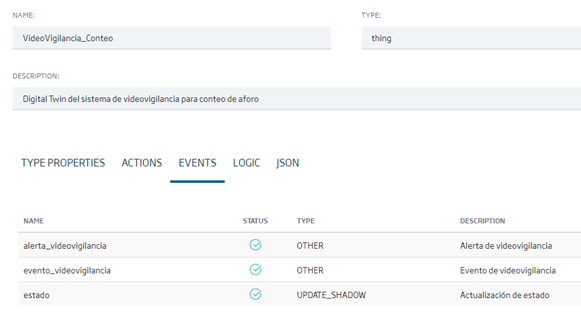

- Modeling of a Digital Twin from the Platform’s Control Panel: a user can precisely define the interface (inputs, outputs and status) of the Digital Twin. The modeling allows the use of the semantics included in the Platform (inputs, outputs and status can in turn be entities).

- Simulation of the Digital Twin: so that you can test the Digital Twin’s behavior, allowing the use of the Platform’s Artificial Intelligence modules.

- Implementation of the Digital Twin: once the Digital Twin has been modeled, the Platform can generate code in various languages to implement the functionality required to use the twin in operation.

- Digital Twin Status: our Digital Twins are securely connected to the Platform, and the Platform has a Shadow of their status:

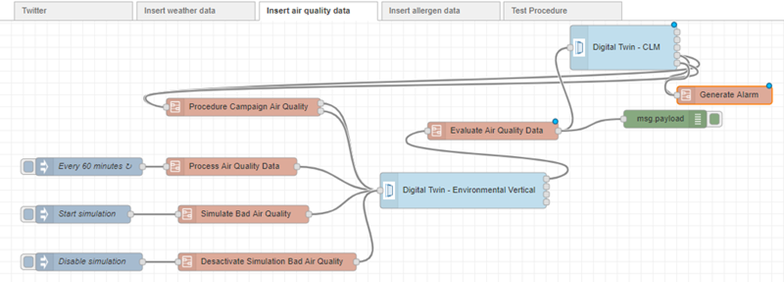

- Digital Twin Orchestration: once the Digital Twins are modeled, implemented and running, the Platform allows a Digital Twin orchestration to be built visually. The output of one Digital Twin can be mapped to the input of another, so that the second one reacts to state changes of the first.
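The ideas of a status shadow and of mapping one twin’s output to another twin’s input can be sketched as follows. This is a conceptual model in plain Python, not code generated by the Platform nor its Digital Twin API: the `DigitalTwin` class, the `map_output_to_input` helper and the presence sensor and street lamp twins are hypothetical.

```python
class DigitalTwin:
    """Hypothetical twin that keeps a shadow of its status and notifies listeners."""

    def __init__(self, name, status):
        self.name = name
        self.shadow = dict(status)  # last known status, as kept on the platform side
        self._listeners = []

    def on_output(self, listener):
        """Register a callable that receives every status change as an output."""
        self._listeners.append(listener)

    def update(self, **changes):
        """Record a status change in the shadow and emit it to the listeners."""
        self.shadow.update(changes)
        for listener in self._listeners:
            listener(changes)


def map_output_to_input(source, target, rule):
    """Orchestration: pass each output of `source` through `rule` into `target`."""
    source.on_output(lambda changes: target.update(**rule(changes)))


sensor = DigitalTwin("presence-sensor", {"occupied": False})
lamp = DigitalTwin("street-lamp", {"brightness": 0})

# Rule: full brightness while the street is occupied, dimmed otherwise.
map_output_to_input(
    sensor,
    lamp,
    lambda changes: {"brightness": 100 if changes["occupied"] else 10},
)

sensor.update(occupied=True)  # the lamp reacts to the sensor's state change
```

After the last call, the lamp’s shadow reflects the new brightness even though nothing addressed the lamp directly, which is the behavior the orchestration is meant to provide.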

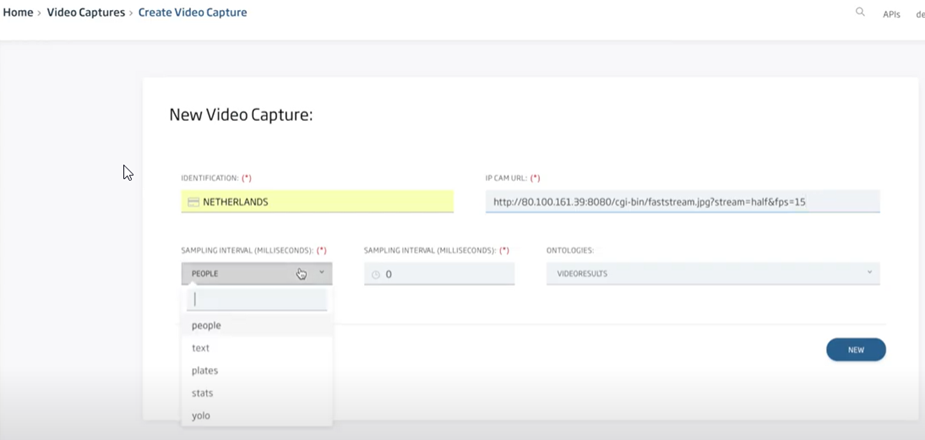

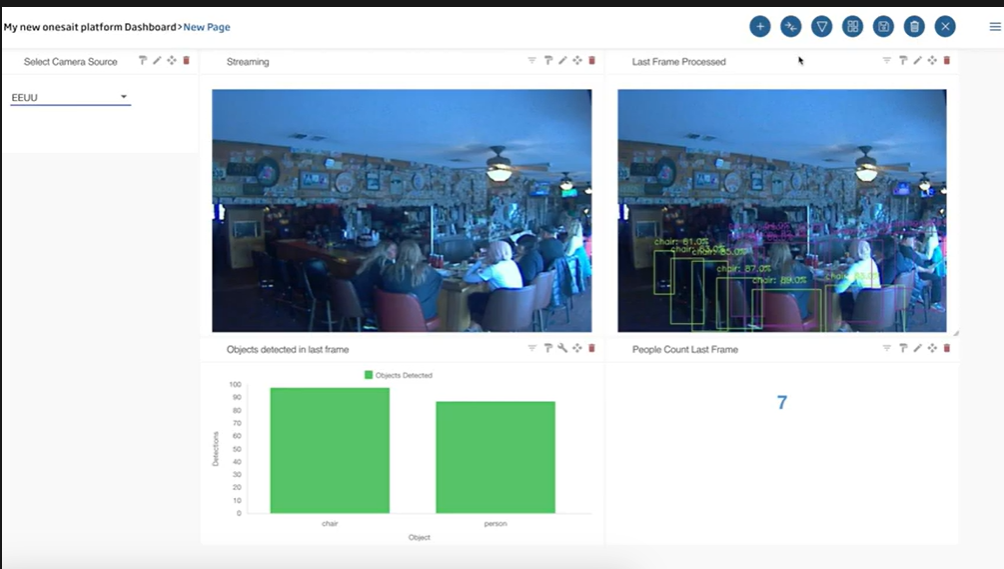

Video Broker

This component allows you to connect to cameras through the WebRTC protocol, and process the video stream associating it with an algorithm (people detection, OCR, etc.).

The result of this processing can be displayed in the Platform’s Dashboards in a simple way.

Interoperability Layer

This layer offers interfaces on top of the knowledge layer, establishing security policies and connectors so that external systems can access the Platform, and vice versa. It allows services to be built from the Platform’s data. To that end, one of the APIs offered to developers must be the native API for accessing data in the knowledge layer.

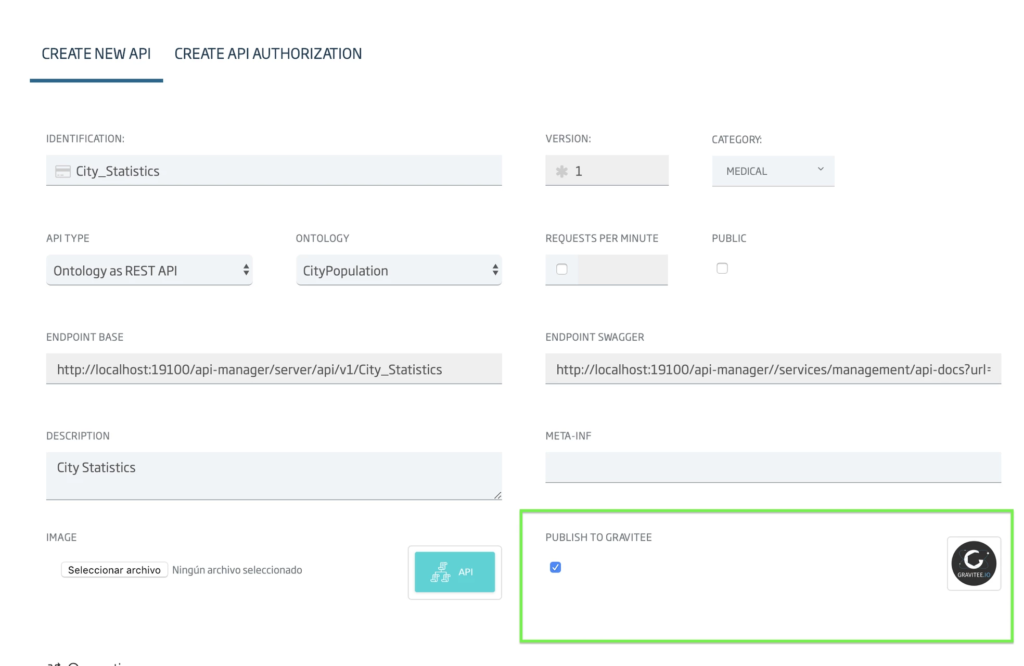

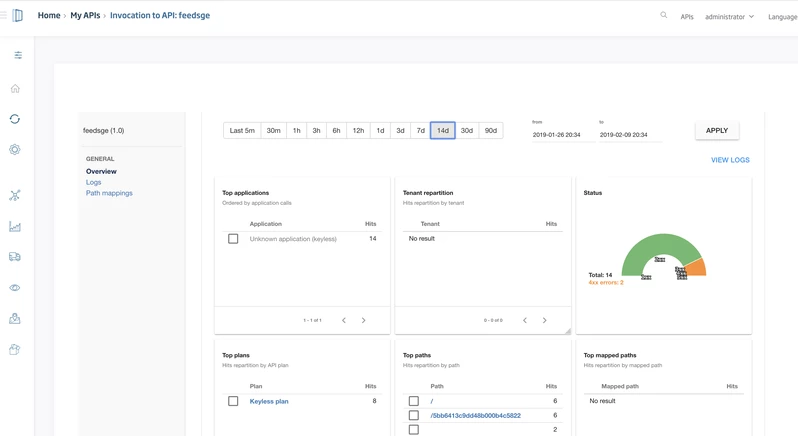

API Manager

This module allows the information managed by the Platform to be made available with REST interfaces. These APIs are created from the control panel and can be queries or updates. It also offers an API Portal to consume the APIs, and an API Gateway to invoke the APIs.

The Platform integrates the Gravitee API Manager for scenarios in which a more advanced control of the REST APIs is necessary, for example: custom security policies, throughput control, etc.

It is the typical mechanism with which web applications, portals and mobile applications are integrated with the Platform. Current BI tools also offer connectors for consuming these REST APIs, as these examples show:

- How to represent data in Power BI from REST APIs?

- How to view data from a Platform REST API on QlikView?

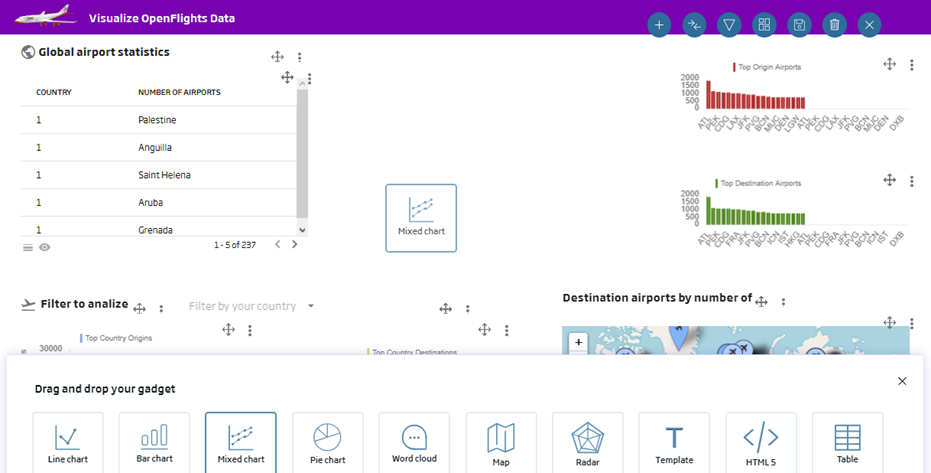

Dashboard Engine

This module allows the simple generation and display of powerful dashboards on the information managed by the Platform, consumable from different types of devices, and with analytical and Data Discovery capabilities. All of this is centrally orchestrated through the Onesait Platform Control Panel, from which these Dashboards can be made public or shared with other Platform users.

These dashboards follow the philosophy of powerful component frameworks such as React, Angular or Vue: they are built from simple, autonomous and reusable components called gadgets.

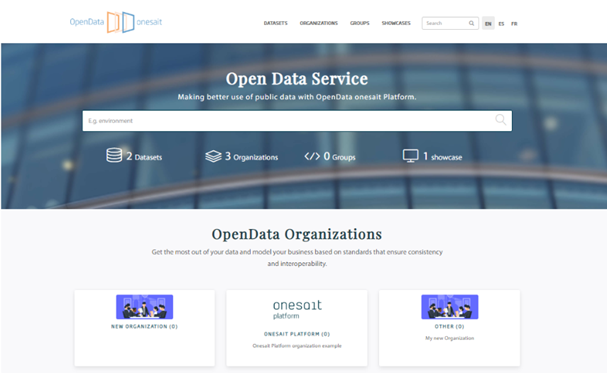

Open Data Portal

The Onesait Platform integrates the open source Open Data portal CKAN among its components.

CKAN is an open source data portal that provides tools to publish, share, find and use data. Its basic unit is the dataset, in which the data is published and which is made up of resources and metadata. The resources store the data in different formats (CSV, XML, JSON, Shapefile, etc.). Thanks to the integration with the Platform, the complete system has functionalities such as:

- Unified management in the Control Panel and the Platform.

- Complete management of datasets and resources.

- Publishing features as datasets.

- Publication of APIs as datasets.

- Integration with the Platform’s Dashboards.

- Full security integration of the Platform, authentications, authorizations, etc.
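Since datasets and their resources are the basic units in CKAN, a client typically searches for datasets and then reads their resources. The sketch below uses CKAN’s Action API (`/api/3/action/package_search`) to build a search URL and to extract the resources from a reply. The portal address and the sample reply are made up for this example, and no request is actually sent; how the portal is exposed in a given Platform deployment is an assumption to check against that installation.

```python
import json
from urllib.parse import urlencode

# Hypothetical portal address; /api/3/action/ is the path of CKAN's Action API.
CKAN_URL = "https://opendata.example.com"


def package_search_url(query, rows=10):
    """Build the URL for CKAN's package_search action."""
    query_string = urlencode({"q": query, "rows": rows})
    return f"{CKAN_URL}/api/3/action/package_search?{query_string}"


def list_resources(response_text):
    """Extract (dataset name, resource format, resource URL) from a package_search reply."""
    reply = json.loads(response_text)
    if not reply.get("success"):
        raise ValueError("CKAN reported a failed request")
    return [
        (dataset["name"], resource.get("format", ""), resource.get("url", ""))
        for dataset in reply["result"]["results"]
        for resource in dataset.get("resources", [])
    ]


# Abridged, made-up reply with the envelope that CKAN actions return.
SAMPLE_REPLY = json.dumps({
    "success": True,
    "result": {
        "count": 1,
        "results": [{
            "name": "air-quality",
            "resources": [
                {"format": "CSV", "url": "https://opendata.example.com/air.csv"},
                {"format": "JSON", "url": "https://opendata.example.com/air.json"},
            ],
        }],
    },
})

url = package_search_url("air quality", rows=5)
resources = list_resources(SAMPLE_REPLY)
```

Checking the `success` flag before reading `result` matters because CKAN wraps both successful and failed calls in the same JSON envelope.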

SDKs and APIs

The Platform offers REST APIs to access all of its components, both at the operation level and at the management level. It also offers multilanguage SDKs to communicate with the Platform in a simple way.

We explain this in detail in the Client APIs and Digital Broker guides.


Header image: スグブログ