MLOps: maturity levels and Open Source tools

Up until very recently, building Machine Learning (ML) and Artificial Intelligence (AI) models, and especially bringing them to production, was quite a challenge. There were difficulties in obtaining and storing quality data, unstable models, dependence on IT systems, and finding suitable profiles (a mix of Data Scientist and IT skills).

Luckily, this is now changing: although some of these problems still exist, organizations have begun to see the benefits of ML models, and their use in companies has become widespread.

However, the growth of ML model deployment brings new challenges, such as managing and maintaining ML assets and monitoring the models. In this scenario, MLOps has emerged as a new trend and has become essential in any AIML ecosystem. Its main goals are to maximize the performance of ML models, increase agility in model development, and improve ROI.

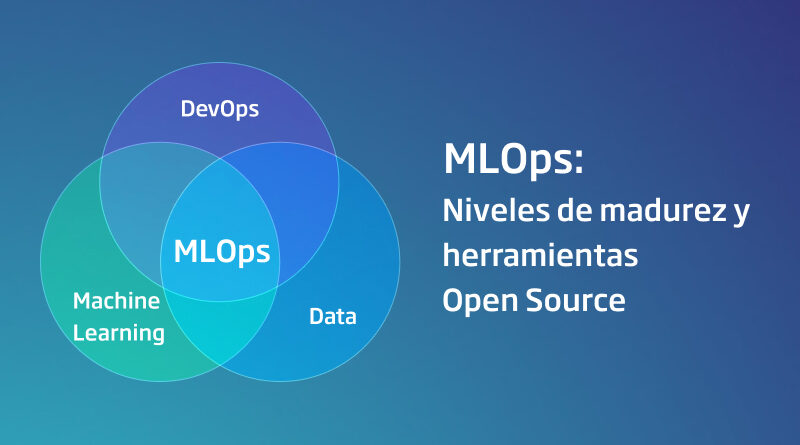

Components of MLOps

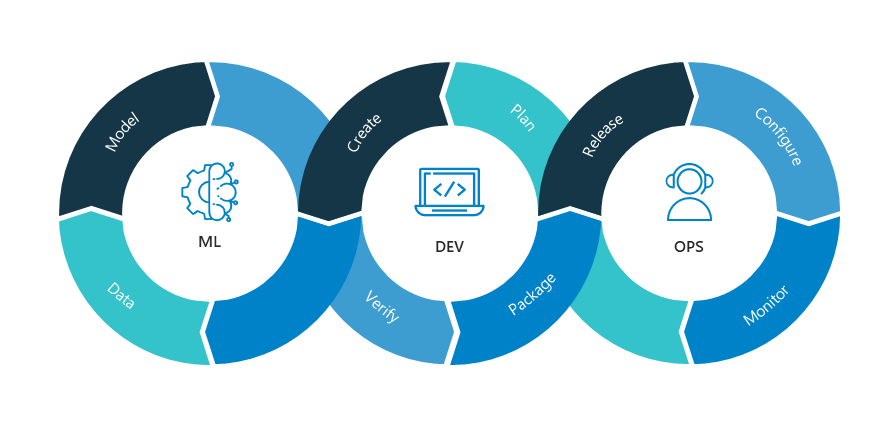

The goal of MLOps is to help the AIML ecosystem manage the model life cycle through automation, consistently producing high-quality, reliable results without performance degradation and ensuring the scalability of AIML products.

As may be expected, however, achieving this is not easy, and a complete, fully automated MLOps solution has several components, including:

- Automated ML model building pipelines: Analogous to continuous integration and continuous delivery (CI/CD) in DevOps, ML pipelines are established to build, update, and prepare models for production accurately, efficiently, and reliably.

- Model Serving: This is one of the most critical components. It deploys models in a scalable and efficient way so that the model’s users can keep consuming the ML model outputs without interruption of service.

- Model Version Control: This is an important step in an AIML workflow, enabling tracking of code changes, maintenance of data history, and cross-team collaboration. It also lets experimentation scale with agility and makes it possible to reproduce experiment results whenever necessary.

- Model Monitoring: Helps measure key performance indicators (KPIs) related to ML model health and data quality.

- Security and Governance: This is a very important step to secure access to ML model results and track activity, minimizing the risk of the results being consumed by unwanted audiences and bad actors. Nowadays, data is the true asset, so it is essential that it is only accessible to the intended audience.
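To make the first component more concrete, here is a minimal sketch, in plain Python with no MLOps tooling, of an automated model-building pipeline with a CI/CD-style quality gate: train a model, evaluate it on held-out data, and only mark it deployable if its error stays under a threshold. The toy least-squares model, the function names (`train`, `evaluate`, `run_pipeline`) and the `max_mae` threshold are hypothetical choices for illustration; a real pipeline would run these steps in an orchestrator or CI system with a real training library.

```python
"""Minimal sketch of an automated model-building pipeline with a quality gate.

All names here are hypothetical; a real pipeline would call an orchestrator
and a real training library instead of these toy steps.
"""
from dataclasses import dataclass
from statistics import mean


@dataclass
class Model:
    """Toy model: predicts y = slope * x (least squares through the origin)."""
    slope: float

    def predict(self, x: float) -> float:
        return self.slope * x


def train(data: list[tuple[float, float]]) -> Model:
    # Least-squares slope for a line through the origin: sum(x*y) / sum(x^2).
    num = sum(x * y for x, y in data)
    den = sum(x * x for x, _ in data)
    return Model(slope=num / den)


def evaluate(model: Model, data: list[tuple[float, float]]) -> float:
    # Mean absolute error on held-out data.
    return mean(abs(model.predict(x) - y) for x, y in data)


def run_pipeline(train_data, test_data, max_mae: float) -> dict:
    """Train, evaluate, and only mark the model deployable if it passes the gate."""
    model = train(train_data)
    mae = evaluate(model, test_data)
    return {"model": model, "mae": mae, "deployable": mae <= max_mae}


if __name__ == "__main__":
    train_data = [(1.0, 2.1), (2.0, 3.9), (3.0, 6.2), (4.0, 7.8)]
    test_data = [(5.0, 10.1), (6.0, 11.8)]
    result = run_pipeline(train_data, test_data, max_mae=0.5)
    print(f"MAE={result['mae']:.3f} deployable={result['deployable']}")
```

Gating on a metric, rather than promoting every trained model, is what allows such a pipeline to run unattended: a regression blocks the release instead of reaching the model’s users.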

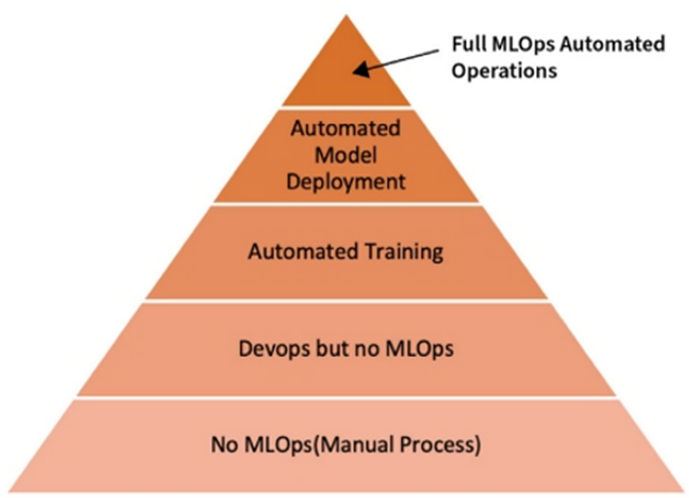

MLOps Maturity Assessment

Currently, most teams and companies do not have all these parts automated, and automating them takes time and effort. To track progress and measure the level of automation achieved with MLOps, AIML providers such as Google and Microsoft have created their own maturity models. Microsoft’s model is described below:

Microsoft MLOps Maturity Model

This model consists of five possible levels:

- Level 0: No MLOps.

- Level 1: DevOps without MLOps.

- Level 2: Automated training.

- Level 3: Automated Model Deployment.

- Level 4: Fully automated MLOps operations.
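One way to use these levels for a quick self-assessment is sketched below. It encodes the five levels as an ordered enum and returns the highest level whose prerequisite, and the prerequisites of every lower level, are met. The capability names and the one-requirement-per-level mapping are simplifying assumptions of this sketch, not Microsoft’s official assessment criteria.

```python
"""Rough self-assessment against the five MLOps maturity levels.

The capability names and the "one requirement per level, cumulative" rule
are hypothetical simplifications, not Microsoft's official criteria.
"""
from enum import IntEnum


class MLOpsLevel(IntEnum):
    NO_MLOPS = 0
    DEVOPS_WITHOUT_MLOPS = 1
    AUTOMATED_TRAINING = 2
    AUTOMATED_MODEL_DEPLOYMENT = 3
    FULL_MLOPS_AUTOMATION = 4


# Hypothetical capability required to reach each level (levels are cumulative).
REQUIREMENTS = [
    (MLOpsLevel.DEVOPS_WITHOUT_MLOPS, "automated_app_builds_and_tests"),
    (MLOpsLevel.AUTOMATED_TRAINING, "automated_training"),
    (MLOpsLevel.AUTOMATED_MODEL_DEPLOYMENT, "automated_model_deployment"),
    (MLOpsLevel.FULL_MLOPS_AUTOMATION, "automated_monitoring_and_retraining"),
]


def assess(capabilities: set[str]) -> MLOpsLevel:
    """Return the highest level whose requirement, and all lower ones, are met."""
    level = MLOpsLevel.NO_MLOPS
    for next_level, requirement in REQUIREMENTS:
        if requirement not in capabilities:
            break  # a missing capability stops the climb
        level = next_level
    return level


if __name__ == "__main__":
    team = {"automated_app_builds_and_tests", "automated_training"}
    print(assess(team).name)  # AUTOMATED_TRAINING
```

Treating the levels as cumulative means that, for example, automating deployment without automating training does not by itself raise the assessed level, which mirrors the step-by-step progression the model describes.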

Compared with other models such as Google’s, Microsoft’s maturity model offers a better way to track MLOps processes in a company, as it is more detailed and specific.

Open Source tools to support MLOps

Having discussed the components and maturity levels of an MLOps system, let us look at some of the interesting tools available.

In the Open Source world there are many tools available, although none of them covers every piece needed for MLOps. Some tools that can be part of an MLOps ecosystem are:

- MLflow: An MLOps platform for managing the ML lifecycle from start to finish. It allows you to track experiments (logging parameters, metrics and artifacts), manage and deploy ML models, package your code into reusable components, and host models as REST endpoints.

- Evidently AI: An open source tool for analyzing and monitoring ML models. Its features let us monitor model health and data quality, and analyze the data through various interactive visualizations.

- DVC: An open source version control system for ML projects. It helps with ML experiment management, project version control, and collaboration, and it makes models shareable and reproducible. It is built on top of Git.
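As an illustration of the data-quality KPIs that monitoring tools such as Evidently AI report, the sketch below computes the Population Stability Index (PSI) between a reference sample (for example, training data) and current production data for one numeric feature. It is hand-rolled and is not Evidently’s API. The equal-width binning, the small epsilon for empty bins, and the 0.2 alert threshold (a common rule of thumb) are assumptions of this sketch.

```python
"""Hand-rolled data-drift check using the Population Stability Index (PSI).

Not Evidently AI's API: an illustration of the kind of data-quality KPI
monitoring tools compute. PSI = sum_i (c_i - r_i) * ln(c_i / r_i), where r_i
and c_i are the shares of reference and current values in bin i.
Bin count, epsilon and the 0.2 threshold are assumed for illustration.
"""
import math


def _bin_shares(values: list[float], edges: list[float], eps: float = 1e-6) -> list[float]:
    """Fraction of values in each bin; eps keeps empty bins away from log(0)."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        # Values below the first edge land in bin 0, above the last in the final bin.
        i = 0
        while i < len(counts) - 1 and v >= edges[i + 1]:
            i += 1
        counts[i] += 1
    total = len(values)
    return [max(c / total, eps) for c in counts]


def psi(reference: list[float], current: list[float], bins: int = 10) -> float:
    """PSI over equal-width bins spanning the reference range."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins
    edges = [lo + k * width for k in range(bins + 1)]
    ref = _bin_shares(reference, edges)
    cur = _bin_shares(current, edges)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def drift_detected(reference: list[float], current: list[float], threshold: float = 0.2) -> bool:
    """Flag drift when PSI exceeds the (assumed) alert threshold."""
    return psi(reference, current) > threshold


if __name__ == "__main__":
    reference = [i / 100 for i in range(100)]
    shifted = [x + 0.5 for x in reference]
    print(psi(reference, reference))  # 0.0
    print(drift_detected(reference, shifted))  # True
```

In a production setup, a check like this would run on a schedule for each monitored feature, and a positive result could raise an alert or trigger retraining.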

MLOps is still in its early stages, and the practice will mature over the coming years. In addition, each company or team has its own issues: some need to deploy models to production quickly, others want an efficient way to govern ML models, and others may need help with model serving and monitoring.