Demonstrator: Bank records flow

Continuing our series of demonstrators, we have developed another proof of concept showing how different Onesait Platform components (DataFlow, Flow Engine, Virtual Entities, APIs, etc.) can work together to generate, in a very simple way, bank records for different entities from data obtained by invoking a REST service.

We will carry out this demonstrator with a Low Code approach, since everything will be created from diagrams, flows, editors and forms from the Platform’s Control Panel. Specifically, we have used the CloudLab environment, our cloud testing laboratory:

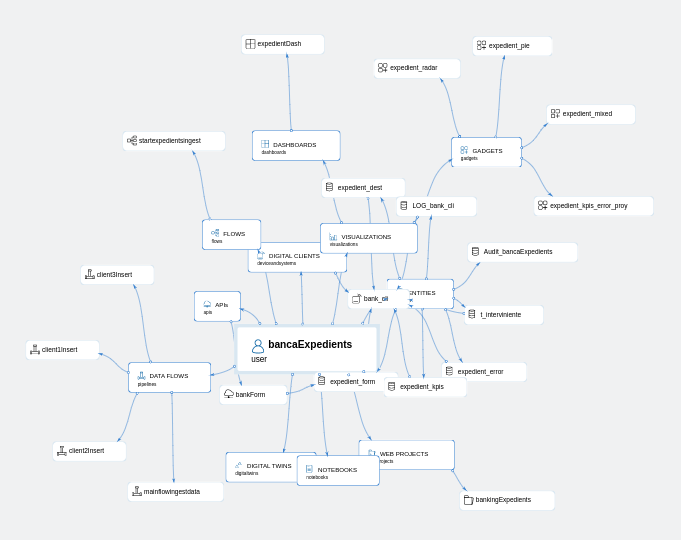

Demonstrator description

The flow validates the incoming data in several ways, for example by checking some parameters of each record against Virtual Entities, or by checking whether any required parameter is empty.

After the validations, the valid records are redirected to different insertion sub-flows depending on the entity. Each sub-flow completes the data and stores the records in the corresponding entity. The discarded records are stored in a separate Entity, with an error field indicating why they could not be inserted.

Finally, the results of the file registration can be seen in a Dashboard, which is loaded from a web application that the Platform itself offers. This avoids developing the application from scratch: you only have to indicate which Dashboards will be accessible from the Web Application's menu.

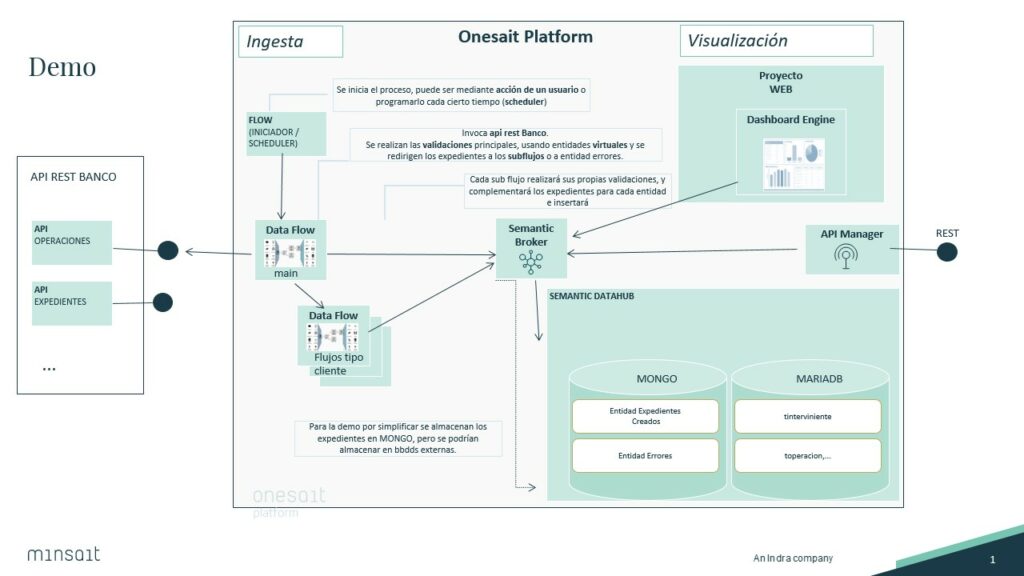

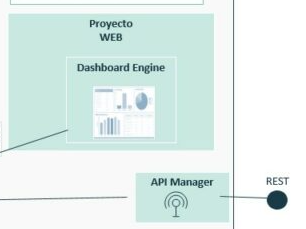

Next you can see a diagram showing the system:

What is done here?

First of all, we have a REST API from a bank, which provides us with the necessary information to generate the different files.

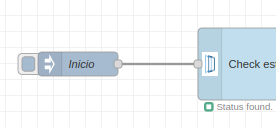

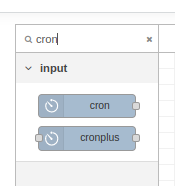

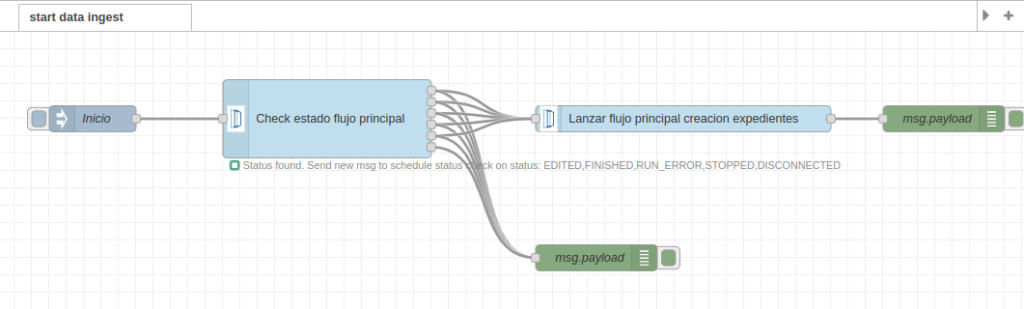

For the demonstration, we have a Flow («startexpedientsingest») that starts the process manually, although we could use a cron node instead to run it periodically.

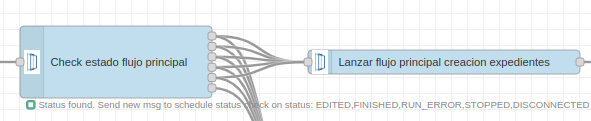

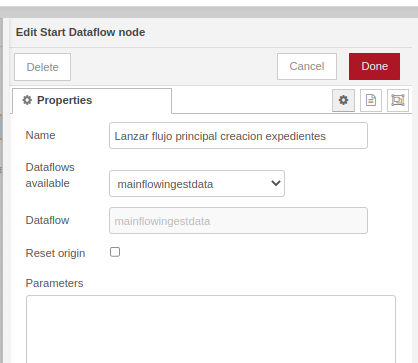

At this point, we launch the execution, first checking the status of the main DataFlow. If its status allows a new run, it is started.
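The "check the status, then launch" step can be sketched in plain Python. This is only an illustration under stated assumptions: the status names in `LAUNCHABLE` and the two callables (`get_status`, `start`) are hypothetical stand-ins for whatever the Flow Engine nodes actually call, not the Platform's API.

```python
from typing import Callable

# Hypothetical status names; the real DataFlow statuses may differ.
LAUNCHABLE = {"EDITED", "STOPPED", "FINISHED"}


def launch_if_viable(get_status: Callable[[], str],
                     start: Callable[[], None]) -> bool:
    """Start the DataFlow only when its current status allows a new run.

    Returns True if the start callable was invoked, False otherwise.
    """
    status = get_status()
    if status in LAUNCHABLE:
        start()
        return True
    return False
```

Passing the status check and the start action as callables keeps the sketch independent of any concrete client, so it can be tried with simple lambdas.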

We would now be at this point in the diagram above.

In the main flow, the REST API of a bank is invoked, which returns the information for the demonstration. These are records with hundreds of fields: some meet the requirements to become files, while others do not and end up stored, together with the error, in the «expedient_error» entity.

In the diagram, we see this component:

This module acts as the Platform’s Bus or Broker. It offers connectors in different technologies such as Kafka, MQTT, REST, WebSockets, AMQP, etc., allowing different clients (systems and devices) to communicate with the Platform efficiently. If you want to know more about it, we have a detailed article on our Development Portal.

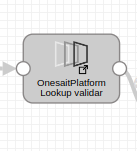

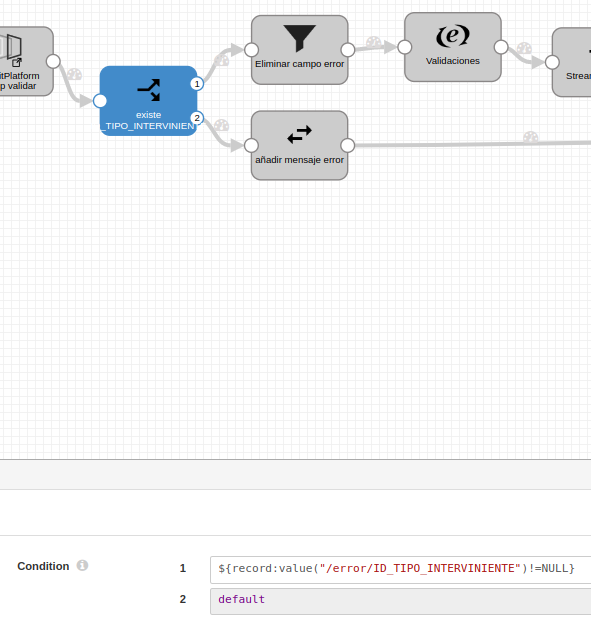

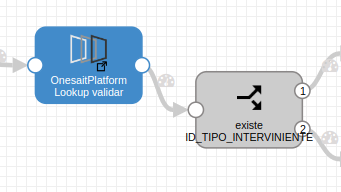

The DataFlows section further down describes each component in more detail. For now, note that in this component of the main flow each record is validated against a Virtual Entity: we check whether the type of participant used in the file exists in this Virtual Entity, which is a catalog of participant types. We do this by simply adding a box to the flow and mapping the record's field to the table's field.

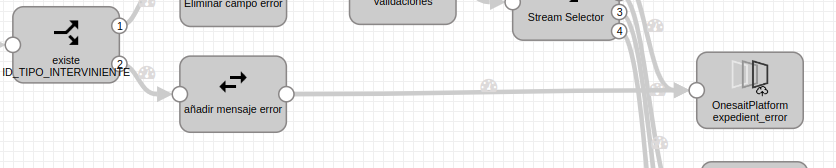

After the lookup against the Entity, we proceed to the validation. If the looked-up parameter is filled in, the type of participant exists and the record continues on its way to be stored. Otherwise, it goes to the error entity with its corresponding message.

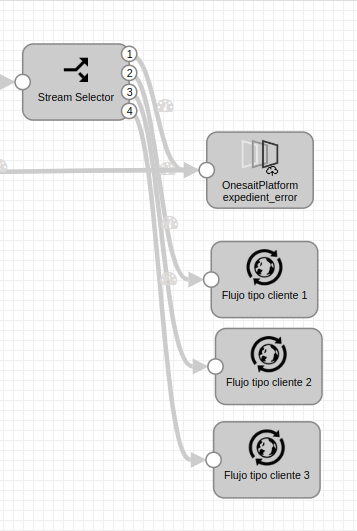
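The lookup and validation steps described above can be sketched in plain Python. This is only an illustration, not the DataFlow's actual implementation: the catalog contents, the field names `participant_type` and `participant_desc`, and the error message are all hypothetical.

```python
# Hypothetical catalog rows, standing in for the participant-type Virtual Entity.
PARTICIPANT_TYPES = {"TITULAR": "Account holder", "AVALISTA": "Guarantor"}


def lookup_participant(record: dict) -> dict:
    """Lookup step: add the catalog description to a copy of the record.

    The added field is None when the participant type is not in the catalog.
    """
    enriched = dict(record)
    enriched["participant_desc"] = PARTICIPANT_TYPES.get(
        record.get("participant_type")
    )
    return enriched


def validate_lookup(record: dict) -> tuple:
    """Validation step: a filled lookup field means the type exists.

    Returns (True, record) for valid records and (False, error_record)
    for records that should go to the error entity.
    """
    if record.get("participant_desc") is not None:
        return True, record
    message = f"Unknown participant type: {record.get('participant_type')!r}"
    return False, {"record": record, "error": message}
```

Keeping the lookup and the validation as two separate functions mirrors the two boxes in the flow: the first only enriches, the second only decides.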

After passing the validations, each record goes to its corresponding subflow, depending on whether or not it meets the validation criteria and on the bank, since each case needs the records to be completed or validated in a specific way.

This gives more flexibility: if a new Entity were added, we would only need to add a new sub-flow to the output of the main flow. Instead of one huge flow containing all the functionality, we have it split into segments, which is easier to maintain.
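The idea of one sub-flow per entity can be illustrated with a small dispatch table. This is a sketch under assumptions, not how the DataFlow routes records internally: the entity identifiers `bank_a` and `bank_b`, the `entity_id` field and the handler bodies are hypothetical.

```python
# Registry mapping an entity identifier to its insertion sub-flow.
SUBFLOWS = {}


def subflow(entity_id: str):
    """Decorator that registers a function as the sub-flow for one entity."""
    def register(fn):
        SUBFLOWS[entity_id] = fn
        return fn
    return register


@subflow("bank_a")  # hypothetical entity identifier
def insert_bank_a(record: dict) -> dict:
    return {**record, "stored_in": "expedient_dest", "completed_by": "bank_a"}


@subflow("bank_b")  # hypothetical entity identifier
def insert_bank_b(record: dict) -> dict:
    return {**record, "stored_in": "expedient_dest", "completed_by": "bank_b"}


def route(record: dict) -> dict:
    """Send a validated record to the sub-flow registered for its entity."""
    handler = SUBFLOWS.get(record.get("entity_id"))
    if handler is None:
        return {"record": record, "stored_in": "expedient_error",
                "error": "No sub-flow registered for this entity"}
    return handler(record)
```

Supporting a new entity then means registering one more function; `route` itself does not change, which is the same property the segmented flow gives us.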

Finally, we created a Dashboard that shows the information about this process: the records created, the errors, filtering by day, etc. We have also created a REST API that allows us to query the information in the files.

Components

Next we can see the different components that make up the demonstrator:

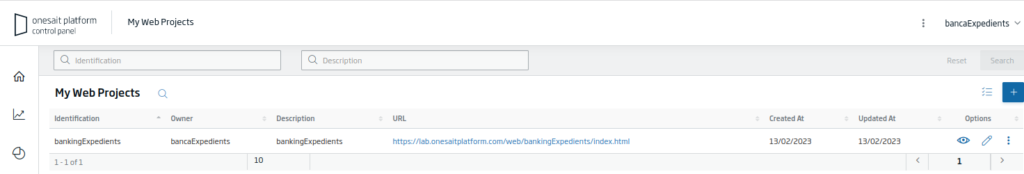

Web project

A web project has been deployed on the Platform:

This framework application allows for agile developments like this, since it has integrated user login with the Platform’s security.

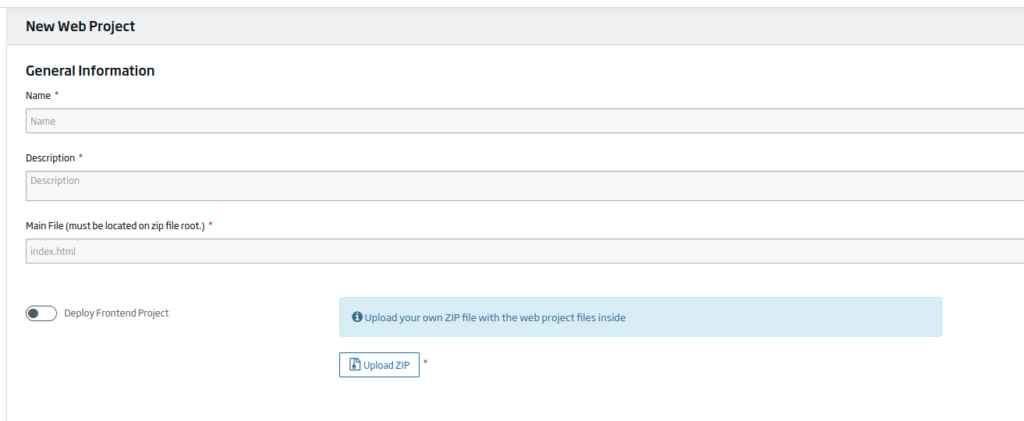

It can be used directly by modifying the configuration file that lists the Dashboards to display. To do this, when creating the web project, we check the «Deploy Frontend Project» option. This makes the application available for us to add the Dashboards, so that in a few minutes we have an application where they can be displayed.

It also shows the information of the logged-in user:

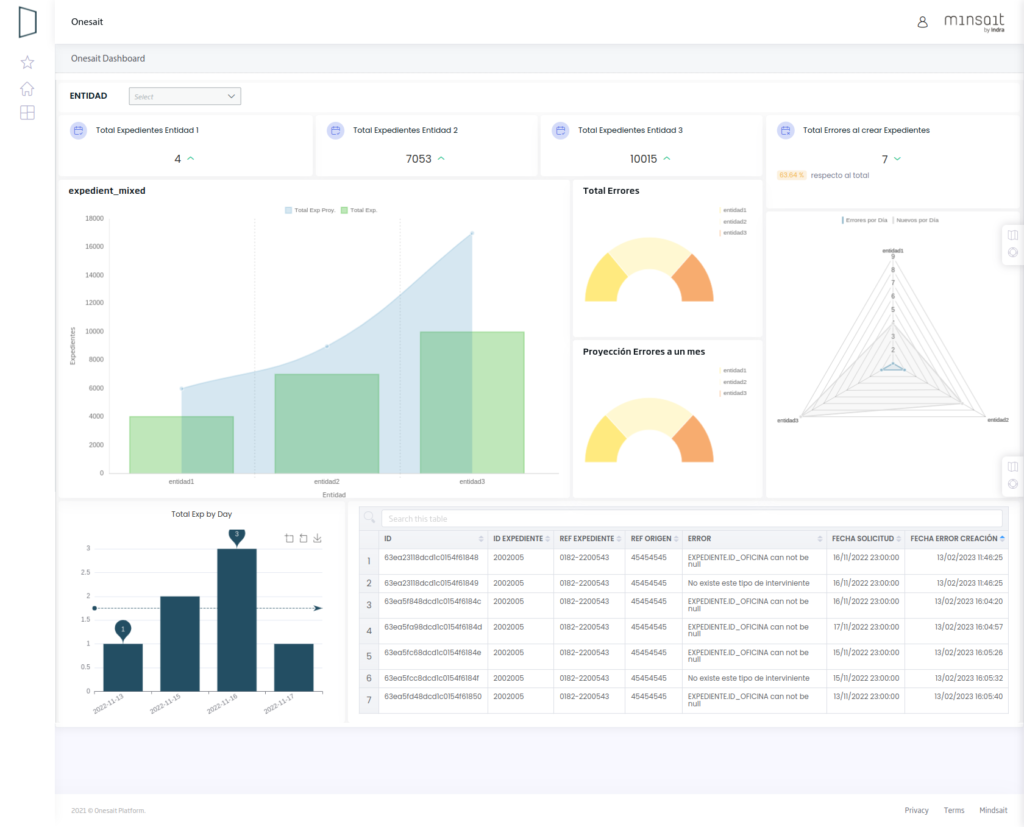

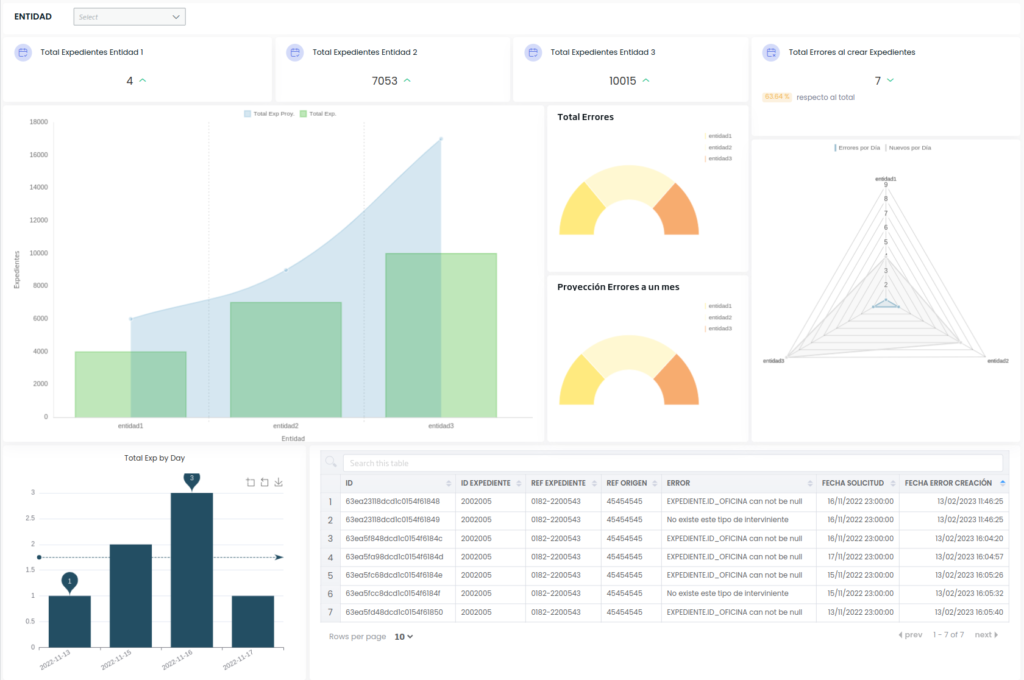

The Dashboard

We have developed a Dashboard that is displayed from the web project as seen previously. This dashboard is developed with the Onesait Platform Dashboard Engine.

It is made up of different elements.

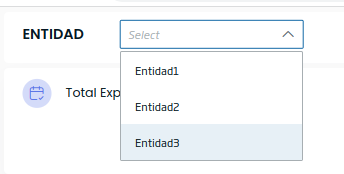

A multiple entity selector, which allows us to filter the data of the gadgets, to show only the values of the Entities that we want:

KPI Total files Entity 2

Shows the total value of records created for this entity.

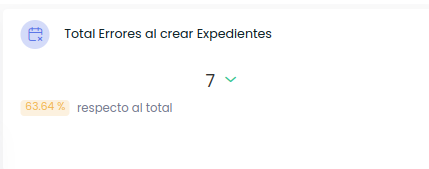

KPI Total Errors when creating Files

Shows the total number of errors stored in the «expedient_error» Entity. It is automatically updated if changes are made to the entity.

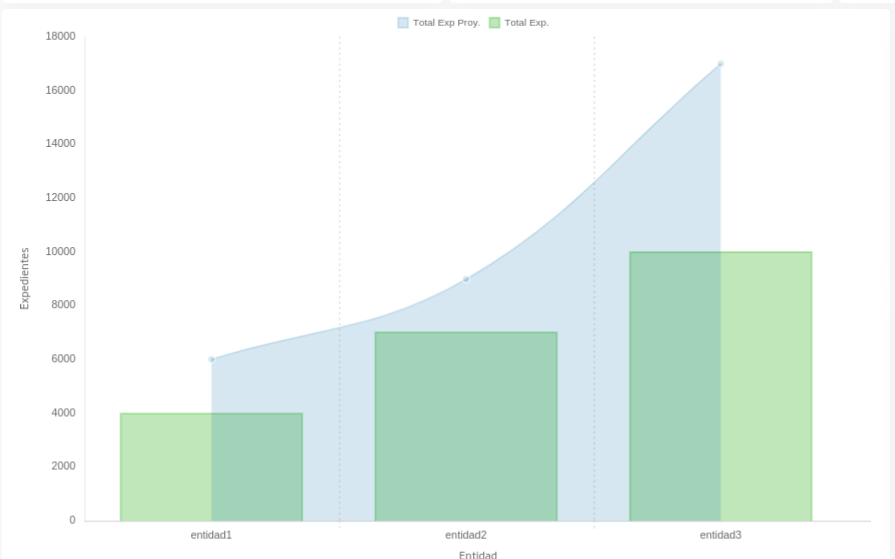

Comparison of Total files created by entity and projection to one month:

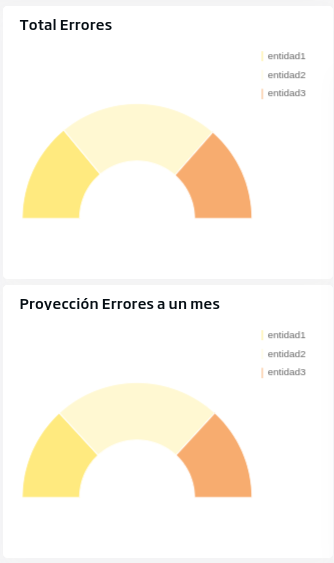

Total Errors and Total Errors for a month

Radar by entities that shows the average number of errors per day and files created.

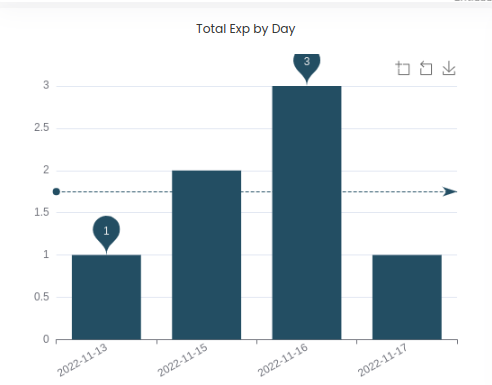

Total files per day

In the bar graph, the x axis shows the dates and the y axis is for the total number of files created for that day. It has a number of possible actions:

- By clicking on the bars, we can filter the error table to the right.

- We can use the zoom by activating it with these buttons, and download the graph in image format.

The bar graph also indicates the maximum, minimum and average values for the displayed values.

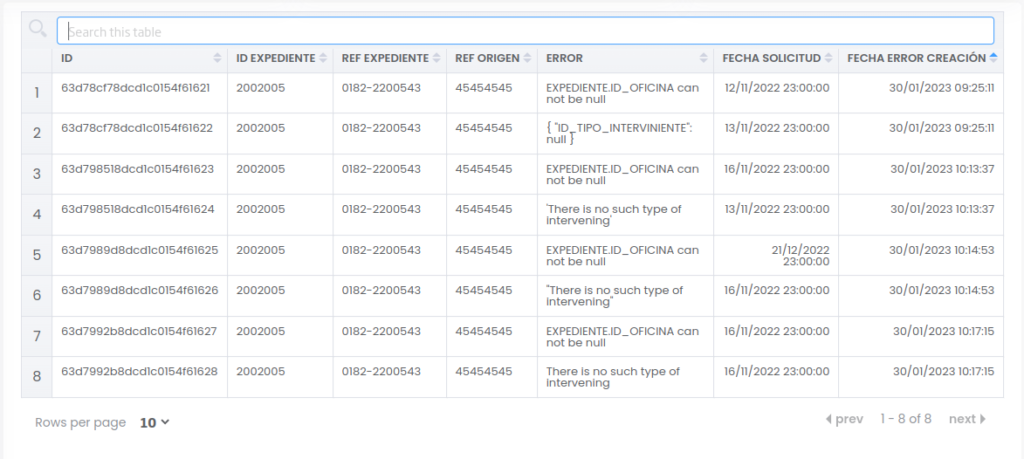
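The values behind this bar graph (one total per day, plus the maximum, minimum and average of the displayed totals) can be reproduced with a few lines of Python. This is only a sketch of the arithmetic, assuming each created file carries a creation date as an ISO string; it is not how the Dashboard Engine computes them.

```python
from collections import Counter
from statistics import mean


def files_per_day(created_dates: list) -> dict:
    """Count created files per date, ordered by date (x axis -> y axis)."""
    return dict(sorted(Counter(created_dates).items()))


def summarize(totals: dict) -> dict:
    """Maximum, minimum and average of the displayed daily totals.

    Assumes at least one day is displayed.
    """
    values = list(totals.values())
    return {"max": max(values), "min": min(values), "avg": mean(values)}
```

For example, three days with 2, 1 and 3 created files give a maximum of 3, a minimum of 1 and an average of 2.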

Error Table

Shows the files not created. We can filter the results by typing next to the magnifying glass icon and pressing «enter». The table also has ordering and pagination.
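The three table behaviours mentioned here (text filter, ordering, pagination) can be sketched as one function over a list of error rows. The row fields (`id`, `date`, `error`) and the default page size are assumptions made for the example; the Dashboard gadget handles all of this for us.

```python
def query_errors(rows: list, text: str = "", sort_by: str = "date",
                 descending: bool = False, page: int = 1,
                 page_size: int = 10) -> list:
    """Filter rows by a case-insensitive text match, sort them, return one page."""
    needle = text.lower()
    # A row matches when the text appears in any of its values.
    matches = [
        row for row in rows
        if needle in " ".join(str(value) for value in row.values()).lower()
    ]
    matches.sort(key=lambda row: row[sort_by], reverse=descending)
    start = (page - 1) * page_size
    return matches[start:start + page_size]
```

Filtering happens before sorting and pagination, so page numbers always refer to the filtered result, which is the behaviour one would expect from the table.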

Flow Engine

We have created a flow that serves as a trigger for the main DataFlow. For the example, it is launched manually, although it could be timed or triggered when an API call arrives.

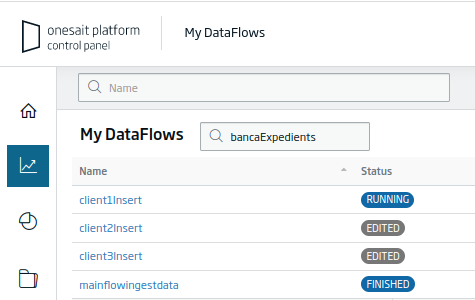

DataFlows

Four DataFlows have been developed:

A main DataFlow that, depending on the entity, redirects the file to an insertion subflow or another.

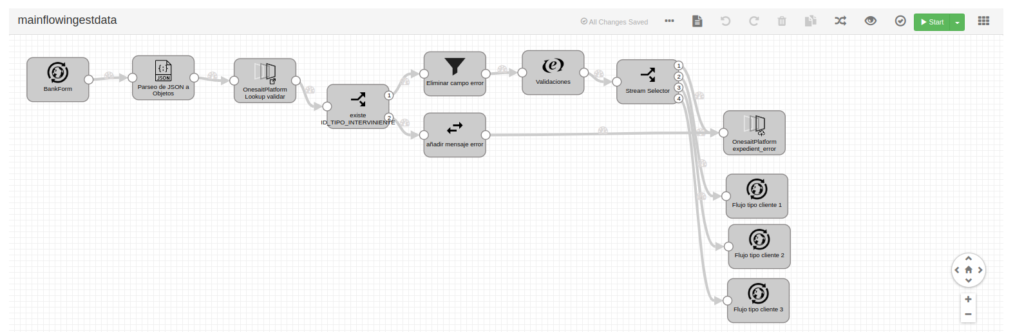

mainflowingestdata

When this flow starts, the REST API is invoked. It returns the records with the information needed to create the files. The input is parsed so that we work with objects rather than with a String.

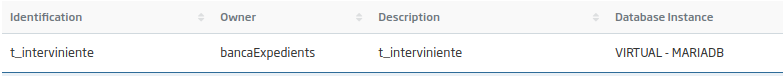
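Parsing the response so that later steps work with objects instead of a String looks roughly like this in Python. The sketch assumes the REST API returns JSON (either one record or a list of records); the field names in the usage note are hypothetical.

```python
import json


def parse_response(body: str) -> list:
    """Turn the raw response body into a list of record objects (dicts)."""
    data = json.loads(body)
    # Normalize a single record into a one-element list.
    return data if isinstance(data, list) else [data]
```

After parsing, a field can be reached by key, for instance `parse_response(body)[0]["EXPEDIENTE"]`, instead of searching inside a string.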

In this step, the flow validates whether the type of participant used in each record matches one of those stored in the virtual entity «t_interviniente». This virtual entity is created from a MariaDB database table. If the type exists, the flow continues.

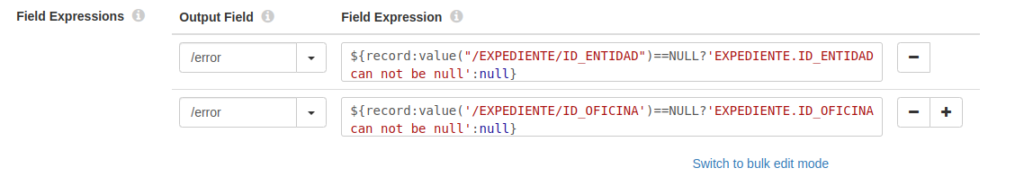

Otherwise, the erroneous record is stored in an entity created for this purpose, together with the error that explains why the file could not be created:

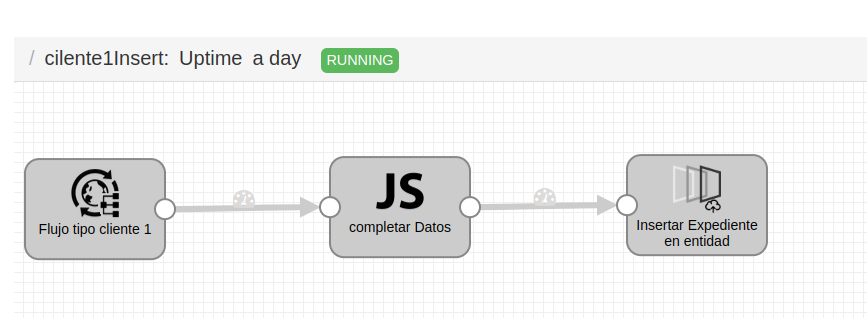

If the type of participant exists, the flow continues with the following validations, which check whether any required field is empty.

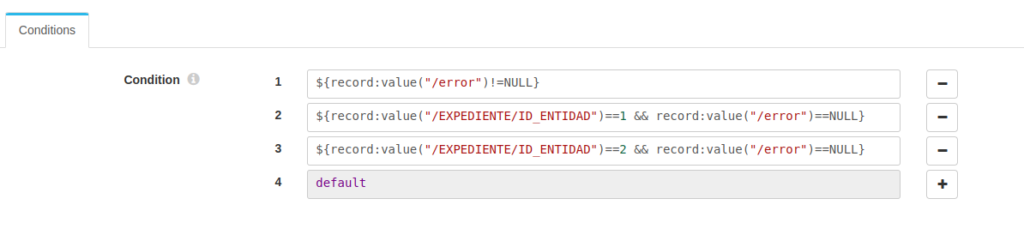
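A required-field check of this kind can be sketched as follows. The list of required paths is hypothetical (the article does not list the real ones), and the dotted-path notation is borrowed from the «EXPEDIENTE.COMENTARIOS» field name used in the insertion flow.

```python
# Hypothetical required fields, written as dotted paths into the record.
REQUIRED = ["EXPEDIENTE.ID", "EXPEDIENTE.ENTIDAD"]


def get_path(record: dict, path: str):
    """Return the value at a dotted path, or None if any key is missing."""
    current = record
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return None
        current = current[key]
    return current


def missing_fields(record: dict, required: list = REQUIRED) -> list:
    """List the required paths that are absent, None or an empty string."""
    return [path for path in required if get_path(record, path) in (None, "")]
```

An empty result means the record passes; a non-empty one can be turned directly into the error message stored with the discarded record.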

Finally, depending on the result of these validations and of the entity identifier, the records will be directed to one subflow or another:

Insertion flow

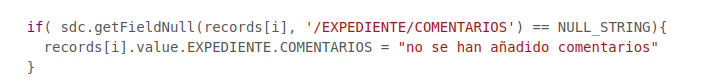

In this subflow, the records that have not previously failed are received, and the information is completed, to later store the file in the «expedient_dest» entity. For the example, if the field «EXPEDIENTE.COMENTARIOS» is null, it is loaded with a comment:
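A minimal sketch of that completion step in Python, assuming the record is a nested object with an `EXPEDIENTE` section; the default comment text is hypothetical.

```python
import copy

# Hypothetical default text; the demonstrator's actual comment is not shown here.
DEFAULT_COMMENT = "Comment added by the insertion subflow"


def complete_record(record: dict) -> dict:
    """Return a copy of the record with a default comment when none is set.

    Assumes "EXPEDIENTE", when present, is a dict.
    """
    completed = copy.deepcopy(record)
    expediente = completed.setdefault("EXPEDIENTE", {})
    if expediente.get("COMENTARIOS") is None:
        expediente["COMENTARIOS"] = DEFAULT_COMMENT
    return completed
```

Working on a deep copy leaves the incoming record untouched, which makes it easy to compare the record before and after completion when debugging.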

Another advantage of the Platform's DataFlow is that each data flow has several modes of operation: we can build the flow in development mode or test it in debug mode. Another mode, shown in the screenshot, is execution mode, where we can see information about the executions: summary, errors, graphs, etc.

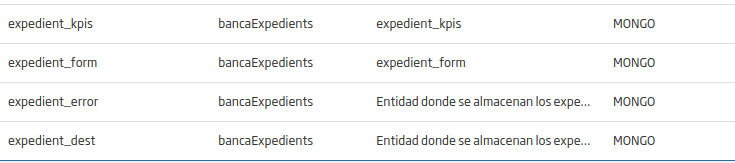

Entities

expedient_dest

Entity where the created files are stored.

expedient_error

Entity that stores the files that failed when the DataFlow tried to create them.

expedient_kpis

Entity with sample data to simulate different KPIs of the files.

expedient_form

Entity that stores the data of the forms that are going to be created and that are served by the REST API in the main DataFlow.

t_interviniente

Virtual entity from a connection to a MariaDB database, from which the types of participants are extracted.

We hope you found this article interesting and that you have been able to see that, with Onesait Platform, you can build very powerful Projects and Products using the Low Code approach. Once again, we encourage and challenge you to register in our CloudLab environment and try, for example, creating an entity and displaying its content on a dashboard, creating a data flow, or any of the thousands of other things that you can do and test.

If you have any questions, do not hesitate to leave us a comment. Also, if you would like us to show you this demonstration live, write to us at contact@onesaitplatform.com to make an appointment.

Header image: Kari Shea at Unsplash.