Onesait Platform deployment strategy

Today we’ll talk a bit about the strategy we follow when deploying our Platform.

Onesait Platform technological base

Containerization. Why containers?

Onesait Platform is based on a microservices architecture written in Java with the Spring Boot framework, and each of these modules or microservices runs inside a Docker container. There are many reasons for choosing Docker to package and run these microservices, the most notable being the following:

Return on investment and cost savings

The first advantage of using containers is return on investment (ROI): the more the costs of a solution can be reduced, the greater its benefits and the better the solution.

Containers make these savings possible by reducing infrastructure needs, since fewer resources are required to run the same application.

Standardization and productivity

Containers ensure consistency across multiple environments and release cycles. One of the biggest advantages of containerization is standardization, since it provides replicable development, build, test and production environments. Standardizing the service infrastructure throughout the whole process allows each team member to work in an environment identical to production. As a result, engineers are better equipped to analyze and fix errors quickly and efficiently, which reduces bug-fixing times and time-to-market.

Containers also make it possible to change images and keep them under version control. For example, if anomalies appear in an environment after a component is updated, it is very easy to roll back to a previous version of the image, and the whole process can be tested in just a few minutes.
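A minimal sketch of that rollback, assuming each environment keeps an ordered history of the image tags deployed to it. The `DeploymentHistory` class, the registry address and the tags are hypothetical; in practice the orchestrator would be the one redeploying the previous image.

```python
from dataclasses import dataclass, field


@dataclass
class DeploymentHistory:
    """Hypothetical record of the image tags deployed to one environment, oldest first."""
    image: str
    tags: list[str] = field(default_factory=list)

    def deploy(self, tag: str) -> str:
        # Record the new version and return the full image reference to run.
        self.tags.append(tag)
        return f"{self.image}:{tag}"

    def rollback(self) -> str:
        # Discard the current version and return the reference of the previous one.
        if len(self.tags) < 2:
            raise RuntimeError("no previous version to roll back to")
        self.tags.pop()
        return f"{self.image}:{self.tags[-1]}"


history = DeploymentHistory("registry.example.com/onesait/controlpanel")
history.deploy("2.1.0")
history.deploy("2.2.0")      # anomalies detected after this update...
print(history.rollback())    # registry.example.com/onesait/controlpanel:2.1.0
```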

Portability, compatibility and independence of the underlying operating system

One of the main advantages is parity: container images run the same way regardless of the server or laptop they run on and of its operating system, be it Windows, Linux or macOS.

For developers, this means less time spent setting up environments, debugging environment-specific issues, and a more portable and easy-to-configure code base. Parity also means that the production infrastructure will be more reliable and easier to maintain.

Simplicity and faster setups

One of the key benefits of containers is simplicity. Users can keep their own configuration in code and deploy it without problems. Because containers can be used in a wide variety of environments, infrastructure requirements are no longer tied to the application environment.

Agility in deployments

Containers reduce deployment time because each process gets its own container and no operating system has to boot.

One of the most important advantages of containers over hypervisor-based virtual machines is that they do not replicate a complete operating system, only the minimum needed for the containerized application to work correctly, so images are much lighter and easier to move between environments. Furthermore, containers do not reserve operating system resources (memory, CPU) exclusively; they share them with the other running containers.

Continuous deployment and testing

Containers ensure consistent environments from development to production. A container image packages its configuration and dependencies, so the same image can be used from the development environment through to production, with no discrepancies and no manual intervention.
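A hypothetical consistency check along these lines: given the image reference each environment is running, it reports every environment whose image differs from the one in development. The environment names and image references are placeholders, not part of the Platform.

```python
# Hypothetical check: every environment should run exactly the image used in dev.
def find_discrepancies(deployed: dict[str, str]) -> dict[str, str]:
    """Return the environments whose image reference differs from the one in 'dev'."""
    reference = deployed["dev"]
    return {env: image for env, image in deployed.items() if image != reference}


IMAGE = "registry.example.com/onesait/controlpanel:2.2.0"
deployed = {"dev": IMAGE, "pre": IMAGE, "pro": IMAGE}
print(find_discrepancies(deployed))  # {}

deployed["pro"] = "registry.example.com/onesait/controlpanel:2.1.0"
print(find_discrepancies(deployed))  # {'pro': 'registry.example.com/onesait/controlpanel:2.1.0'}
```

Pinning images by digest (`image@sha256:<digest>`) rather than by a mutable tag is one way to make "the same image" mean byte-for-byte identical in every environment.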

Multi-cloud platforms

This may well be one of the biggest benefits of containers. In recent years, all the major cloud providers, including Amazon Web Services (AWS) and Google Cloud Platform (GCP), have embraced Docker and added their own support for it. Docker containers can run inside an Amazon EC2 instance, a Google Compute Engine instance, a Rackspace server or VirtualBox, as long as the host operating system supports Docker. In that case, a container running on an Amazon EC2 instance can easily be ported to any of those other environments.

Docker also works well with other providers such as Microsoft Azure and OpenStack, and can be used with configuration managers such as Chef, Puppet and Ansible.

Isolation and security

A malfunction in one container does not cause the entire system it runs on to stop responding or malfunction: applications running in containers are segregated and isolated from each other, which gives you full control over how they use the operating system's resources. No container can see the processes running inside another container.

Containerization. Why Docker?

Containerization technology is not new: Sun Microsystems (later acquired by Oracle) implemented it in Solaris in the 2000s as a means of resource isolation and application portability. However, the technology did not mature until the arrival of Linux Containers (LXC), which rely on cgroups and namespaces, features built natively into the Linux kernel.

Then came Cloud Foundry with its own container implementation, Warden; CoreOS with Rocket (rkt); and Docker. All of them use cgroups and namespaces to limit and allocate operating system resources, but Docker adds one more layer of abstraction with its own library, libcontainer.

Choosing Docker over the rest was simple:

- Widely supported by the Open Source community.

- Its use is widespread.

- It has the highest degree of maturity compared to the rest.

- A wide catalog of applications or “base images” of the most popular products: MySQL, MariaDB, MongoDB, Maven, OpenJDK, etc.

- Most container orchestrators support Docker as a de facto standard, such as Swarm, Cattle, Kubernetes/Openshift, Portainer, Mesos and Nomad.

- For enterprise environments, an Enterprise Edition (EE) is available.

Container Orchestration. Why Kubernetes?

If Docker can be considered the de facto standard in application containerization, Kubernetes can be considered the de facto standard in Docker container orchestration. Kubernetes is written in Go; it was originally developed by Google and is now maintained under the Cloud Native Computing Foundation (CNCF).

In this case, the choice of Kubernetes over other orchestrators is based on:

- Widely supported by the Open Source community.

- Mature: it builds on more than a decade of Google's experience running containers in production.

- Fully integrated with Docker.

- Based on open source.

- Production-ready: Kubernetes itself can be deployed in high availability (HA), and it keeps the applications (pods*) deployed on it highly available.

- Supports clusters of up to 5,000 nodes and up to 150,000 running pods.

- Integrated graphical dashboard.

- Offered as PaaS in various clouds: Amazon (EKS), Azure (AKS), Google (GKE), Oracle (OCI).

- Implemented by Red Hat as Openshift in Enterprise (OCP) and Community (OKD) versions.

- Implemented and/or integrated by Rancher 2.

*In Kubernetes, the smallest deployable unit is the pod: a group of one or more containers that share storage and network resources.
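To make the pod definition concrete, here is a sketch of a Pod manifest built as a Python dictionary: two containers that mount the same `emptyDir` volume (shared storage) and, being in the same pod, share its network namespace. The pod name, container names and images are hypothetical; the field names follow the Kubernetes `v1` Pod schema, and the JSON output could be applied with `kubectl apply -f`.

```python
import json

# Hypothetical two-container pod: both containers mount the same volume
# and share the pod's network namespace (they can talk over localhost).
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "example-module"},
    "spec": {
        "volumes": [{"name": "shared-data", "emptyDir": {}}],
        "containers": [
            {
                "name": "app",
                "image": "registry.example.com/onesait/example-module:1.0.0",
                "ports": [{"containerPort": 8080}],
                "volumeMounts": [{"name": "shared-data", "mountPath": "/data"}],
            },
            {
                "name": "log-shipper",
                "image": "registry.example.com/example/log-shipper:1.0.0",
                "volumeMounts": [
                    {"name": "shared-data", "mountPath": "/logs", "readOnly": True}
                ],
            },
        ],
    },
}


def shared_volumes(manifest: dict) -> set[str]:
    """Names of the volumes mounted by every container in the pod."""
    mounts = [
        {m["name"] for m in c.get("volumeMounts", [])}
        for c in manifest["spec"]["containers"]
    ]
    return set.intersection(*mounts) if mounts else set()


print(shared_volumes(pod))  # {'shared-data'}
print(json.dumps(pod, indent=2))
```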

Deployment of Onesait Platform in k8s. Why Helm?

To automate, distribute and deploy the Onesait Platform on existing Kubernetes clusters, we have chosen Helm as the base technology for several reasons:

- It was born as an official Kubernetes project, developed for Kubernetes, and is now maintained by the Cloud Native Computing Foundation (CNCF).

- It can be used on both Kubernetes and Openshift clusters.

- It allows you to install and uninstall applications as well as manage their life cycle as packages or pieces of software.

- Openshift Operators can be written with Helm using the Operator SDK (as well as with Ansible or Go).

Helm packages complex applications for deployment on Kubernetes: their manifests (Deployment, Service, PersistentVolumeClaim, Ingress, Secret, etc.) are written as parameterized Helm templates and bundled into a Chart. Once packaged, Charts can be versioned and distributed through chart repositories (such as ChartMuseum) and installed on Kubernetes clusters.
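Helm renders chart templates written in Go template syntax (for example `{{ .Values.replicaCount }}`) against a values file. The sketch below only imitates that idea with Python's `string.Template`, to show how one template plus per-environment values yields different manifests; it is not how Helm is implemented, and the release name, image and values are hypothetical.

```python
from string import Template

# Simplified stand-in for a chart template (Helm itself uses {{ .Values.* }}).
DEPLOYMENT_TEMPLATE = Template(
    """apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $name
  template:
    metadata:
      labels:
        app: $name
    spec:
      containers:
        - name: $name
          image: $image
"""
)

# Hypothetical per-environment values, playing the role of values files.
IMAGE = "registry.example.com/onesait/controlpanel:2.2.0"
VALUES = {
    "dev": {"name": "controlpanel", "replicas": 1, "image": IMAGE},
    "pro": {"name": "controlpanel", "replicas": 3, "image": IMAGE},
}


def render(env: str) -> str:
    # substitute() raises KeyError if any placeholder has no value.
    return DEPLOYMENT_TEMPLATE.substitute(VALUES[env])


print(render("pro"))
```

With Helm itself, the equivalent step would be along the lines of `helm install controlpanel ./chart -f values-pro.yaml`, where the chart path and values file name are again hypothetical.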

CaaS Strategy

When choosing a CaaS to cover the complete life cycle of the Onesait Platform, you must bear in mind several considerations:

- In what scope is it going to be used (solutions or projects).

- Type of cost and/or licensing.

- Support.

- No vendor lock-in.

Digital solutions

Two CaaS strategies have been followed over the years for the company's Solutions/Products. The initial one relied on dedicated virtual machines, with the Platform deployed as containers orchestrated by Rancher 1.6. The current one, to which the Solutions should progressively migrate, is based on reusing infrastructure: both the Platform and the Solutions are deployed on a single Openshift cluster.

In January 2019, Onesait launched an RFP to select the organization's reference container deployment platform. The candidates included, among others, Microsoft with AKS, Red Hat with Openshift and Rancher 2 with RKE. As a result of this RFP, we decided to use Openshift as our technological base.

Rancher 1.6 + Cattle – “legacy” environments

The initial deployment strategy of the organization's Solutions and Disruptors that use the onesait Platform as their technological base relies on dedicated virtual machines, on which the containers of both the onesait Platform and the Solution are deployed. These containers are managed by Rancher 1.6, a fully open-source CaaS that uses Cattle, an orchestrator developed specifically for Rancher.

With this strategy, the VMs are provisioned on the Azure cloud, running CentOS in development and pre-production environments and RHEL in production environments. This way, Azure supports the infrastructure and Red Hat supports the operating system.

Once the infrastructure is available, Ansible manages the installation of the base software (Docker and additional operating system packages) and of the CaaS (Rancher), as well as the deployment and start-up of the Platform. Ansible is an open-source Infrastructure as Code (IaC) tool, now maintained by Red Hat, which was chosen over alternatives such as Chef or Puppet for the following reasons:

- Idempotence: running a playbook repeatedly does not alter the system once the desired state has been reached.

- Agentless: it does not need agents running on the machines it manages (it connects to them over SSH).

- Gentle learning curve.
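Idempotence can be illustrated with a function in the spirit of Ansible's `lineinfile` module: it changes a file only when the desired line is missing and reports whether anything changed, so a second run is a no-op. This is a simplified Python sketch, not Ansible's implementation; the file name and the setting written to it are hypothetical.

```python
import tempfile
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Make sure `line` is present in `path`; return True only if the file was changed."""
    existing = path.read_text().splitlines() if path.exists() else []
    if line in existing:
        return False  # desired state already reached: do nothing
    existing.append(line)
    path.write_text("\n".join(existing) + "\n")
    return True


with tempfile.TemporaryDirectory() as tmp:
    conf = Path(tmp) / "sysctl.conf"  # hypothetical target file
    print(ensure_line(conf, "vm.max_map_count=262144"))  # True: first run changes the file
    print(ensure_line(conf, "vm.max_map_count=262144"))  # False: second run is a no-op
```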

In simplified form, the Ansible installation consists of the following steps:

- Installation of the base software (Docker, docker-compose, additional packages).

- CaaS installation.

- Deployment of the Platform in Cattle with docker-compose.

- Installation of scripts for an orderly startup and shutdown, for environments that shut down their infrastructure at night and on weekends to save costs.
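The ordering logic behind such startup and shutdown scripts can be sketched as a topological sort over module dependencies: a module starts only after everything it depends on, and shutdown runs in reverse. The module names and dependencies below are hypothetical, and the sketch only computes the order; real scripts would then run the corresponding `docker-compose` or orchestrator commands.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: module -> modules that must be running first.
DEPENDENCIES = {
    "configdb": set(),
    "realtimedb": set(),
    "controlpanel": {"configdb", "realtimedb"},
    "router": {"configdb", "realtimedb"},
    "reverse-proxy": {"controlpanel", "router"},
}


def startup_order(deps: dict[str, set[str]]) -> list[str]:
    # static_order() yields each module after all of its dependencies.
    return list(TopologicalSorter(deps).static_order())


def shutdown_order(deps: dict[str, set[str]]) -> list[str]:
    # Stop dependent modules before the modules they rely on.
    return list(reversed(startup_order(deps)))


print("start:", startup_order(DEPENDENCIES))
print("stop: ", shutdown_order(DEPENDENCIES))
```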

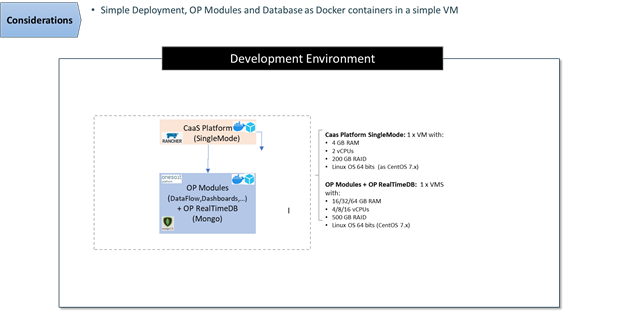

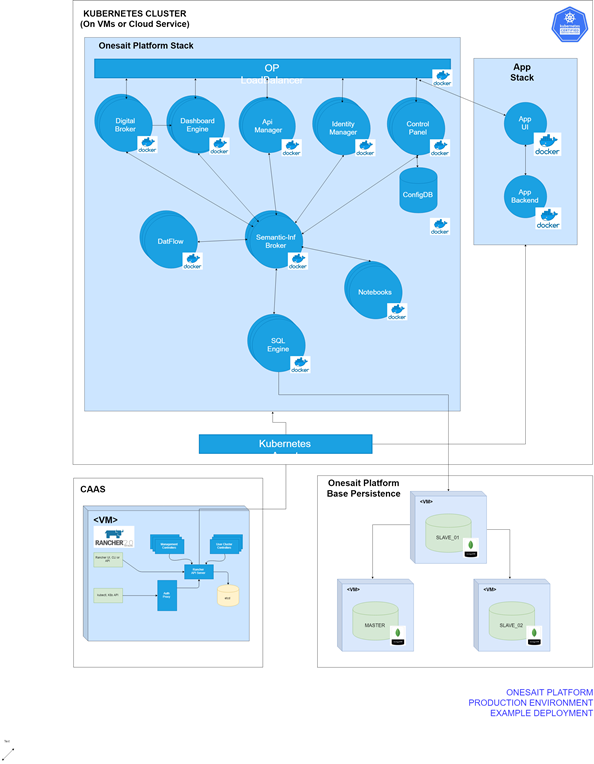

When the Platform is deployed on VMs in development environments, the proposed deployment is as follows:

- CaaS environment (Rancher 1.6): single-instance deployment on 1 small VM (2 cores and 8 GiB RAM), since CaaS unavailability does not affect the operation of the Platform.

- Worker nodes (Platform):

  - Containerized deployment of all modules, including persistence and the reverse proxy or balancer.

  - Deployment on 1 VM, sized according to project needs and the modules to be deployed:

    - Basic environment: 1 VM with 4 cores, 16 GiB RAM and 256 GiB disk.

    - Typical environment: 1 VM with 8 cores, 32 GiB RAM and 512 GiB disk.

    - High load environment: 1 VM with 16 cores, 64 GiB RAM and 1 TiB disk.

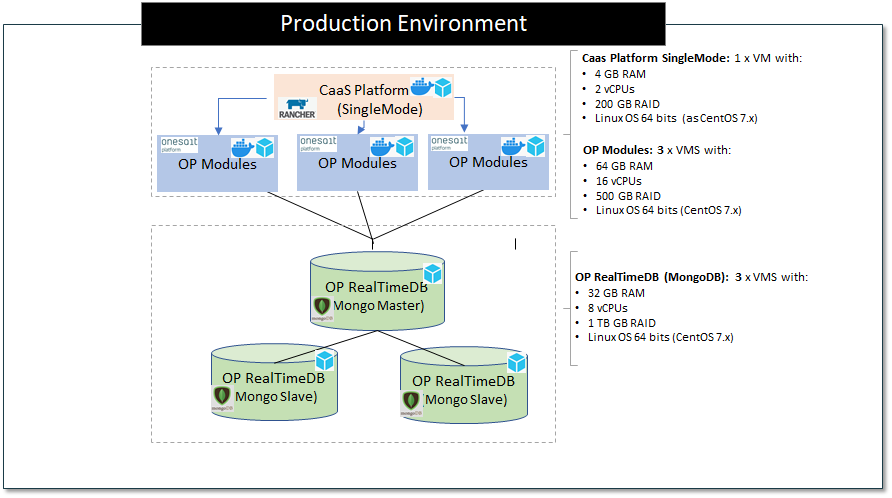

In production environments, the proposed deployment includes:

- Reverse proxy / load balancer: the chosen cloud provider's load balancer, the Platform's reverse proxy (NGINX) or a hardware balancer.

- CaaS environment (Rancher): depending on the required HA, a single instance or a cluster of 3 VMs with an external database.

- Worker nodes (Platform):

  - Containerized deployment of the Platform modules, excluding persistence.

  - Deployment on multiple VMs, sized according to project needs and the modules to be deployed:

    - Basic environment: 2 VMs with 4 cores, 16 GiB RAM and 256 GiB disk each.

    - Typical environment: 2 or 3 VMs with 8 cores, 32 GiB RAM and 512 GiB disk each.

    - High load environment: 3 VMs with 16 cores, 64 GiB RAM and 1 TiB disk each.
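The production tiers above can be turned into aggregate worker capacity with simple arithmetic, reading each size as per VM. The sketch also shows the capacity left if one worker VM is lost; that failure scenario, the per-VM reading and taking the typical tier as 3 VMs are assumptions for illustration, not sizing rules of the Platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    vms: int
    cores: int      # per VM
    ram_gib: int    # per VM
    disk_gib: int   # per VM


# Production worker tiers from the text (typical tier assumed to be 3 VMs here).
TIERS = {
    "basic": Tier(vms=2, cores=4, ram_gib=16, disk_gib=256),
    "typical": Tier(vms=3, cores=8, ram_gib=32, disk_gib=512),
    "high-load": Tier(vms=3, cores=16, ram_gib=64, disk_gib=1024),
}


def capacity(tier: Tier, failed_vms: int = 0) -> dict[str, int]:
    """Total cores, RAM and disk across the worker VMs still running."""
    running = tier.vms - failed_vms
    return {
        "cores": running * tier.cores,
        "ram_gib": running * tier.ram_gib,
        "disk_gib": running * tier.disk_gib,
    }


for name, tier in TIERS.items():
    print(name, capacity(tier), "after losing one VM:", capacity(tier, failed_vms=1))
```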

Openshift

Openshift is based on different Open Source components such as:

- Kubernetes, as a container orchestrator.

- Prometheus for metric collection.

- Grafana for visualization of the different metrics of the system.

- Quay as Docker image registry.

- RHCOS / RHEL as the node operating system.

A minimal highly available (HA) Openshift installation requires three VMs for the master nodes and three VMs for the processing (worker) nodes.
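One common reason for three master nodes is quorum: the Openshift control plane runs etcd, which stays writable only while a majority of its members, ⌊n/2⌋ + 1, is available. The sketch below is plain arithmetic, not an Openshift or etcd API, computing how many master failures a cluster of n members tolerates.

```python
def quorum(members: int) -> int:
    """Majority of etcd members needed to keep the cluster writable."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """Members that can fail while a majority remains available."""
    return members - quorum(members)


for n in (1, 2, 3, 4, 5):
    print(f"{n} masters: quorum {quorum(n)}, tolerates {tolerated_failures(n)} failure(s)")
```

With this arithmetic, 3 masters tolerate one failure and 4 masters still tolerate only one, which is why odd member counts are the usual choice.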

The choice of Openshift depended on several important points:

- Support: Red Hat offers all levels of support, covering the operating system of the nodes on which Openshift is deployed, Openshift itself and all the software components it certifies for use by Operators.

- Security: Openshift pays special attention to security requirements; for example, by default it does not allow containers in pods to run as root.

- Training: official training and certifications covering both administration and operation and development on Openshift.

- CI/CD: integrated into Openshift, either natively with S2I (Source-to-Image) or with Red Hat certified Jenkins Operators.

- Subscription: a single subscription covers multiple installations across public or private clouds and even on premises.
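As an illustration of the non-root requirement, the sketch below inspects a pod spec using the Kubernetes `securityContext` fields `runAsNonRoot` and `runAsUser`, where a container-level setting overrides the pod-level one. It is a simplified, hypothetical pre-deployment check: Openshift itself enforces this through Security Context Constraints at admission time, and the image's default user is not inspected here.

```python
def effective(container: dict, pod_spec: dict, key: str):
    """Container-level securityContext value, falling back to the pod-level one."""
    for ctx in (container.get("securityContext", {}), pod_spec.get("securityContext", {})):
        if key in ctx:
            return ctx[key]
    return None


def root_containers(pod_spec: dict) -> list[str]:
    """Names of containers that are not guaranteed to run as a non-root user."""
    offenders = []
    for c in pod_spec.get("containers", []):
        run_as_user = effective(c, pod_spec, "runAsUser")
        run_as_non_root = effective(c, pod_spec, "runAsNonRoot")
        if run_as_user == 0:
            offenders.append(c["name"])  # explicitly runs as root
        elif run_as_user is None and not run_as_non_root:
            offenders.append(c["name"])  # falls back to the image's user, possibly root
    return offenders


spec = {
    "securityContext": {"runAsNonRoot": True},
    "containers": [
        {"name": "app"},
        {"name": "legacy", "securityContext": {"runAsUser": 0}},
    ],
}
print(root_containers(spec))  # ['legacy']
```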

The migration of Managed Solutions with dedicated VMs and Rancher 1.6 to Openshift will be done progressively and upon request.

Projects

For clients/projects that do not have a defined CaaS strategy, lack the necessary knowledge of existing containerization/orchestration technologies or, to save costs, cannot afford an Openshift license, the following Platform deployment scenarios are proposed.

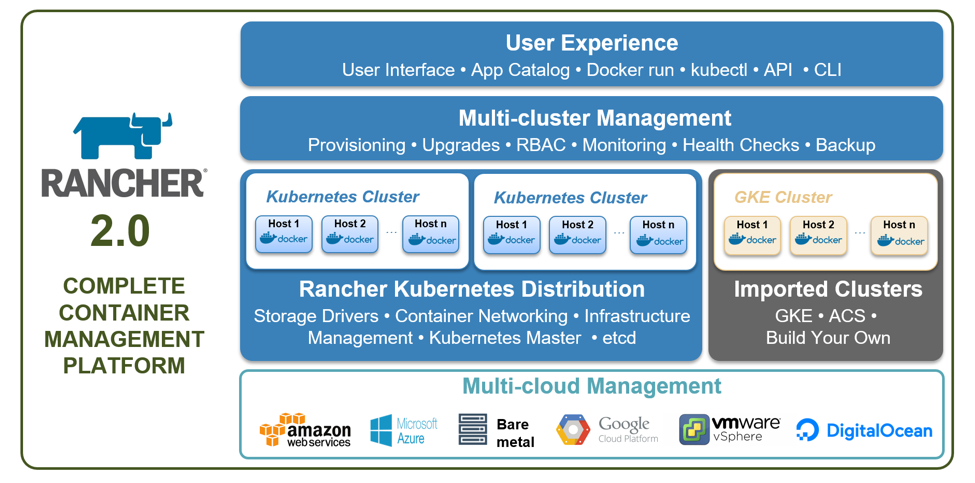

Rancher 2 + Kubernetes

The strategy for projects that do not have a CaaS is to install one together with the onesait Platform. In this case, the stack has evolved from Rancher 1.6 as CaaS with Cattle as container orchestrator to Rancher 2 with Kubernetes. The move to Rancher 2 is due to Rancher 1.6 reaching end of life (EOL) on June 30, 2020.

The main features of Rancher 2 include:

- It is 100% Open Source and free (no support).

- Supports Kubernetes up to version 1.17.X (depending on whether it is managed with RKE or imported).

- It offers RKE, an engine that makes installing Kubernetes clusters very simple.

- It integrates with existing Kubernetes clusters, in the cloud or on premises.

- It integrates with Kubernetes clusters provided as services by the different clouds (AKS, EKS, GKE, OCI).

- Integrated metrics and their visualization with Prometheus and Grafana.

- Integrated CI/CD.

- Extensive catalog of tools integrated and packaged with Helm.

- Optional support in two plans, Standard and Platinum, each with 4 severity levels and 8×5 and 24×7 coverage.

As with Rancher 1.6, Ansible manages the installation of the base software and the CaaS and the deployment of the Platform, as follows:

- Installation of the base software (Docker, docker-compose, additional packages).

- Installation of CaaS and deployment of Kubernetes with RKE.

- Deployment of the Platform in Kubernetes with Helm.

- Installation of scripts for an orderly startup and shutdown, for environments that shut down their infrastructure at night and on weekends to save costs.

The recommended infrastructure for development and production is essentially the same as that described above for Solutions. For development:

- CaaS environment (Rancher 2 + etcd + Control Plane): single-instance deployment on 1 small VM (4 cores, 8 GiB RAM and 256 GiB disk), since CaaS unavailability does not affect the operation of the Platform.

- Worker nodes (onesait Platform):

  - Containerized deployment of all modules, including persistence and the reverse proxy or balancer, exposed through an Ingress.

  - Deployment on 1 VM, sized according to project needs and the modules to be deployed:

    - Basic environment: 1 VM with 4 cores, 16 GiB RAM and 256 GiB disk.

    - Typical environment: 1 VM with 8 cores, 32 GiB RAM and 512 GiB disk.

    - High load environment: 1 VM with 16 cores, 64 GiB RAM and 1 TiB disk.

For production environments:

- Reverse proxy / load balancer: the chosen cloud provider's load balancer, the Platform's reverse proxy (NGINX) or a hardware balancer.

- CaaS environment (Rancher): depending on the required HA, a single instance or a cluster of 3 VMs with an external database.

- Worker nodes (Platform):

  - Containerized deployment of the Platform modules, excluding persistence.

  - Deployment on multiple VMs, sized according to project needs and the modules to be deployed:

    - Basic environment: 2 VMs with 4 cores, 16 GiB RAM and 256 GiB disk each.

    - Typical environment: 2 or 3 VMs with 8 cores, 32 GiB RAM and 512 GiB disk each.

    - High load environment: 3 VMs with 16 cores, 64 GiB RAM and 1 TiB disk each.

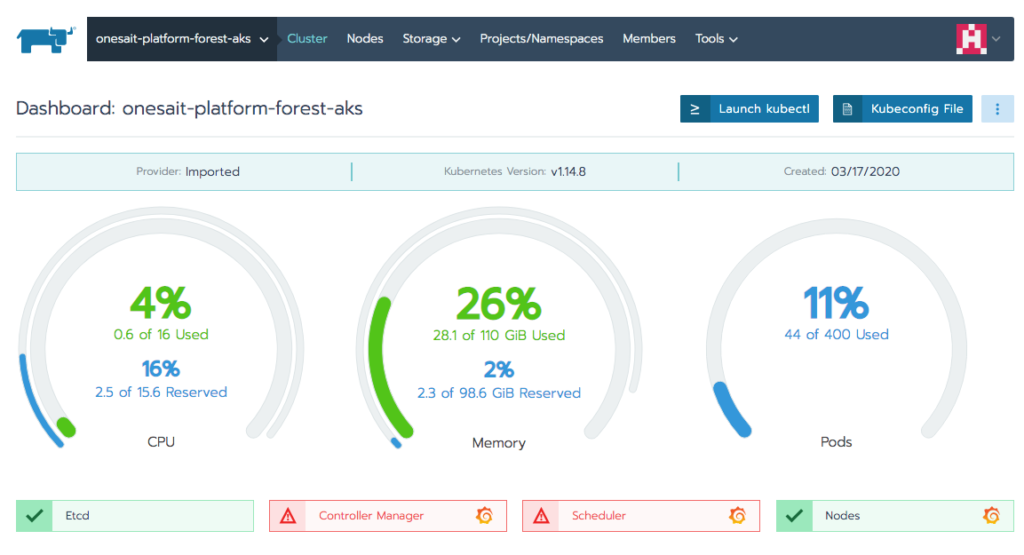

Rancher 2 + Kubernetes as a service (AKS/EKS/GKE)

For clients/projects that already have a Kubernetes cluster, either on premises or as a cloud service, we recommend installing Rancher 2 on an additional VM, separate from the cluster, as a centralized deployment and monitoring console.

This Rancher 2 node imports the existing cluster, whose namespaces and workloads can then be viewed and managed: new deployments, startup, shutdown, scaling up or down, etc.

Rancher 1.6 + Cattle – “legacy” environments

What has already been described for Solutions with Rancher 1.6 applies here as well.

As you can see, our strategy keeps evolving and adapting to each need. We hope you found it interesting; if you have any questions or doubts, please leave us a comment.
